Can LLM Simulations Truly Reflect Humanity? A Deep Dive

Simulation powered by Large Language Models (LLMs) has become a promising method for exploring complex human social behaviors. However, the application of LLMs in simulations presents significant challenges, particularly regarding their capacity to accurately replicate the complexities of human behaviors and societal dynamics, as evidenced by recent studies highlighting discrepancies between simulated and real-world interactions. This blog rethinks LLM-based simulations by emphasizing both their limitations and the necessities for advancing LLM simulations. By critically examining these challenges, we aim to offer actionable insights and strategies for enhancing the applicability of LLM simulations in human society in the future.

Introduction

With the approximate human knowledge, large language models have revolutionized the way of simulations of social and psychological phenomena

However, there is a notable lack of comprehensive studies examining whether LLM simulations can accurately reflect real-world human behaviors. Some studies have explored this dimension from various angles. First, recent studies

In this post, we delve into the limitations of LLM-driven social simulations. We discuss and summarize the challenges these models face in capturing human psychological depth, intrinsic motivation, and ethical considerations. These challenges provide insights for future LLM evaluation and development. Nevertheless, we compare the traditional simulation and the LLM-based simulation, and find that the LLM-based simulation is still a significantly potential direction due to their low costs compared to humans, scalability, and the ability to simulate emergent behaviors. Furthermore, we propose future research directions to better align LLM simulations with humans.

Limitations in Modeling Human

Some recent works employ LLMs to model human behaviors, such as Simucara, which simulates a town to observe social dynamics

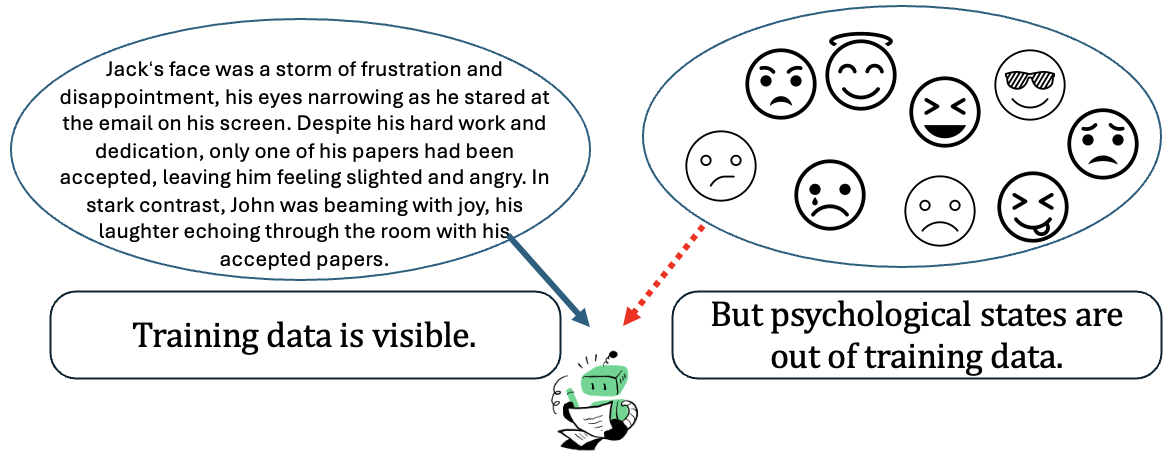

- Training datasets lack inner psychological states. The training datasets used for LLMs often do not include nuanced representations of inner psychological states. This limitation becomes particularly evident when LLMs are tasked with simulating diverse psychological types or personalities, as they lack intrinsic motivations that drive human decision-making. Humans make decisions based on not only the rationale and logics, but also their personal psychological states. Collecting datasets that accurately represent inner psychological states is challenging in real-world settings. Consequently, LLM training data often lacks the depth needed to capture the complexities of human psychology. Can LLM simulate these states without getting enough data related to them?

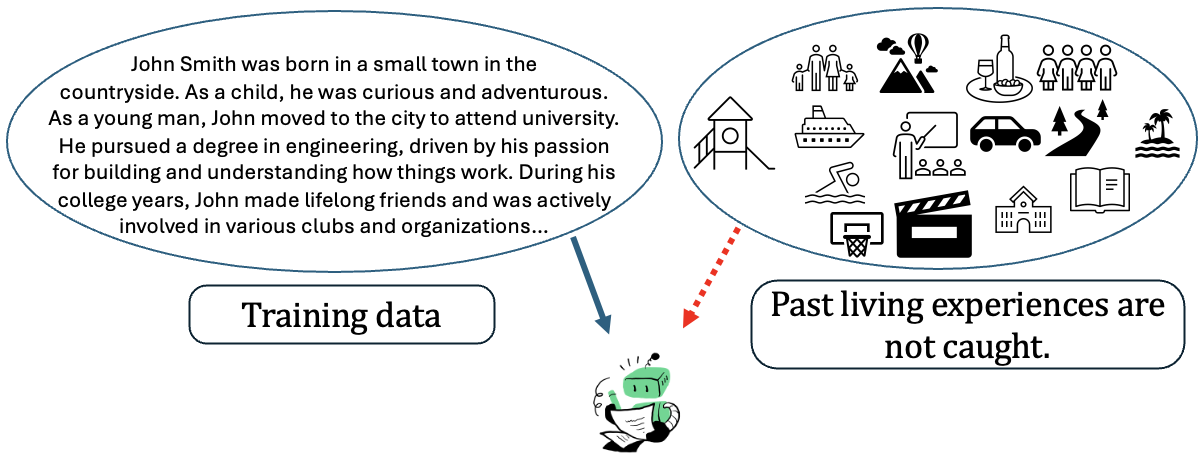

- Training datasets lack personal past living experiences. Additionally, training datasets also lack comprehensive life histories, which significantly impact individual decision-making. For instance, someone with a past experience of betrayal may develop tendencies that influence their future interactions

.

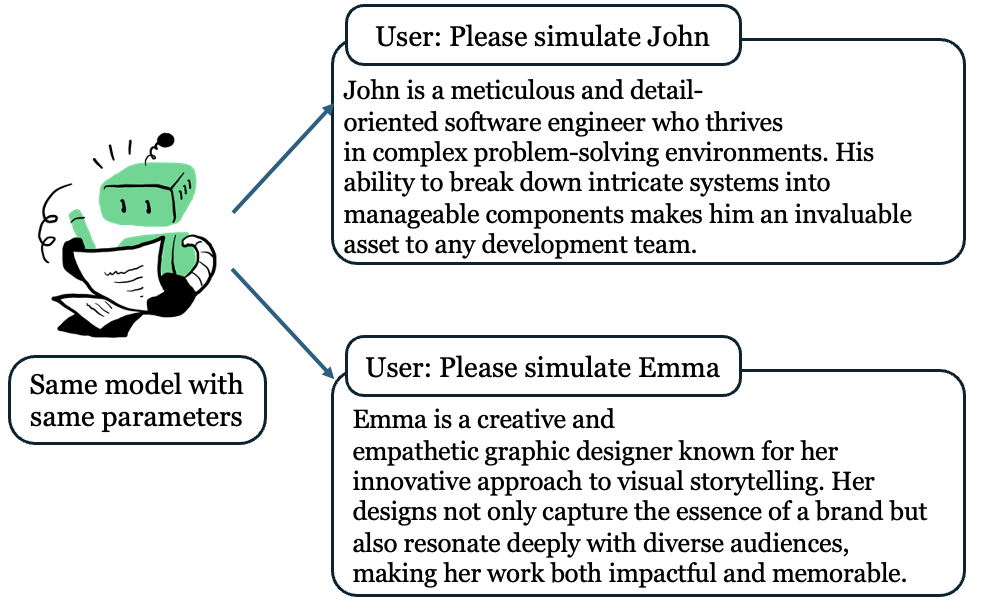

- Not sure whether using the same LLM can simulate different persons. Using the same LLM model, such as black-box GPT-4, to simulate multiple agents means these agents inherently share the same foundational knowledge, making it challenging to create distinct, authentically varied personalities. The absence of personal psychological states, individual thoughts, and unique life experiences means that LLMs tend to mirror a generalized human cognition rather than capturing distinct individual personalities. Consequently, a critical question arises: Can a single LLM genuinely simulate diverse psychological profiles? While prompts might guide an LLM to adopt varied behaviors, the model’s core knowledge remains unchanged, raising doubts about the depth of psychological diversity that can be simulated.

Absence of Human Incentives

Except for the psychological states, another significant factor that profoundly influences human behaviors is the incentive structure of humans, like survival, financial security, social belonging, emotional fulfillment, and self-actualization—each varying in intensity among individuals. Human decisions are shaped not only by immediate circumstances but also by intrinsic motivations, goals, and desires that vary widely among individuals

These incentives are essential for replicating realistic human behavior, as they drive diverse responses to similar situations, enable goal-oriented decision-making, and influence the trade-offs people make based on personal values and life experiences

-

Lacking human incentive datasets. Similar with the psychological states, collecting the human incentive datasets is difficult. First, people may not be willing to share with their true incentives and personal goals. Second, in different time, humans may have varying goals. Third, many people do not really know what they want, the motivation is hiddened in their subconscious

. It is hard to express them as the natural language to encode into LLMs. -

Representing incentives with the next-word prediction. Even we have data about human inner incentives, it is hard to model the relationships between incentives and the decisions using the next-word predition training paradigm

<\d-cite>. The next-word prediction paradigm is ill-suited for modeling incentive-based behavior. Human incentives involve complex, often subconscious relationships between past experiences, emotions, and anticipated future outcomes, which shape individual decision-making in subtle, dynamic ways. Simulating such intricate, motivation-driven behaviors would require a model capable of understanding and prioritizing internal goals, a capability far beyond current LLMs’ design. Thus, while LLMs offer impressive results in language tasks, their reliance on statistical prediction, rather than intrinsic motivation, creates a gap between simulated and authentic human behavior.

Bias in Training Data

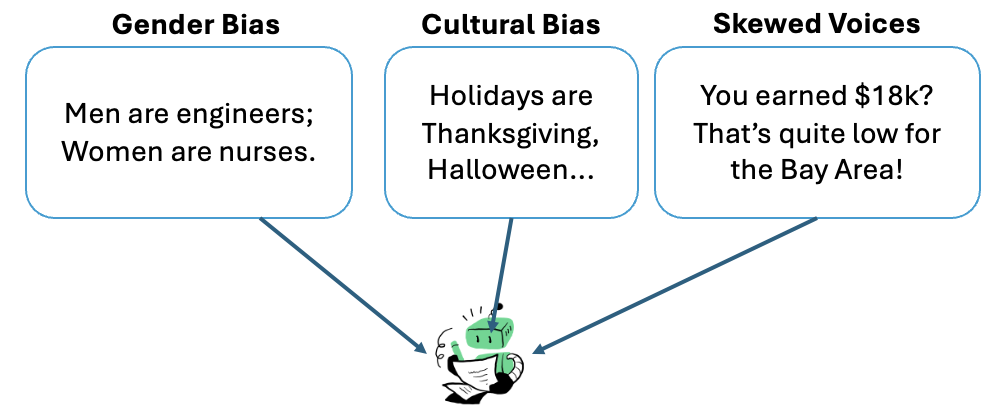

LLMs provide a unique means to simulate large-scale social processes, such as idea dissemination, network formation, or political movement dynamics. The responses of LLMs represent their knowledge learned from the training datsets. Thus, the bias in the training data of LLMs is a significant concern

-

Cultural bias. For example, training data is predominantly sourced from English-speaking countries, leading to a limited understanding of diverse languages, cultures, and societal norms

. This geographic and cultural imbalance can result in outputs that marginalize or misrepresent non-Western perspectives. -

Occupational and socioeconomic bias. Another critical issue is occupational and socioeconomic bias. Workers in industries such as manufacturing or agriculture, who often have limited digital footprints, are frequently excluded from datasets. As a result, the lived experiences of these groups are underrepresented, leading to LLM outputs that fail to reflect their perspectives or address their needs—despite these individuals constituting a significant portion of human society.

-

Gender bias. Gender bias is also evident in LLM training data, with studies showing that models are more likely to generate male-associated names and roles, reinforcing stereotypes. For example, LLMs are 3-6 times more likely to choose an occupation that stereotypically aligns with a person’s gender

. Similarly, class bias emerges in outputs that favor affluent individuals or highlight experiences and values associated with wealth, as data on the Internet disproportionately reflects the views and experiences of those familiar with and active in digital spaces . -

Skewed voice. These biases stem from the reliance on internet-sourced data, which is inherently skewed toward the voices of digitally literate populations. As a result, LLMs reflect the biases present in the training data, amplifying inequalities and potentially excluding significant portions of human societies from being accurately represented.

Why Use LLM Simulations Despite Their Many Limitations?

Despite these limitations, LLMs represent a revolutionary advancement in the field of simulation, offering unique advantages that traditional methods cannot match. Traditional simulations have long been restricted by high costs

| Aspect | Traditional Simulation | LLM-Based Simulation |

|---|---|---|

| Cost | High: Requires significant financial and logistical resources, including human participants and infrastructure. | Low: Computationally efficient with no need for live participants. |

| Scalability | Limited: Expensive and resource-intensive to scale up. | High: Can simulate large-scale environments with minimal additional cost. |

| Flexibility | Rigid: Constrained by predefined rules and models. | Adaptive: Generates emergent behaviors and adapts to diverse scenarios. |

| Ethical Concerns | High: Ethical issues arise from involving live participants or animals in sensitive experiments. | Low: Avoids ethical concerns by simulating behaviors without real-world involvement. |

| Bias and Representation | Controlled: Biases depend on the initial design of the simulation. | High Risk: Reflects and amplifies biases in training data. |

| Data Requirements | Specific: Requires custom data collection and modeling for each scenario. | Broad: Utilizes vast, pre-trained datasets but lacks scenario-specific granularity. |

| Interpretability | High: Clear causal relationships based on predefined rules. | Moderate: Decisions are derived from complex patterns, making causality harder to trace. |

| Realism | Moderate: Captures predefined behaviors but struggles with emergent phenomena. | Variable: Capable of emergent phenomena but limited by training data and lack of intrinsic motivation. |

| Use Case Complexity | Limited: Best suited for scenarios with well-defined rules and parameters. | High: Suitable for complex, open-ended scenarios with adaptive behaviors. |

| Time to Develop | Long: Requires significant time to design, test, and validate models. | Short: Pre-trained LLMs reduce development time, with additional fine-tuning as needed. |

| Potential for Innovation | Moderate: Limited by predefined parameters and models. | High: Generates unexpected insights through emergent patterns. |

Cost Efficiency and Scalability

Traditional simulations, especially those involving complex human behavior, require significant financial and logistical resources, often involving teams of experts, infrastructure, and, in some cases, live participants. For instance, compensation in Singapore typically ranges from 10 to 30 Singapore dollars per hour per person. Simulating a society with 1,000 individuals would therefore incur costs between 10,000 and 30,000 Singapore dollars, representing a substantial expense. LLM-based simulations, on the other hand, are computationally efficient and can run on a large scale without the need for human participants. This makes them more accessible and affordable for researchers, enabling extensive studies across diverse scenarios and repeated simulations at a fraction of the cost.

Unexpected and Emergent Results

LLMs have the unique ability to produce “out-of-the-box” results, generating insights that might not emerge in a structured, rule-based simulation

Simulating Unconventional Scenarios

LLM-based simulations can achieve scenarios that traditional methods struggle to replicate. For example, simulating human society under conditions of anarchy or alien societal structures

Reduced Ethical Concerns

Traditional human-centered simulations can pose ethical challenges, often requiring participants to experience stress, discomfort, or other adverse conditions for experimental purposes. For example, psychological experiments like the Stanford Prison Experiment

Need of LLM Multi-agent System

There is growing research interest in LLM-based multi-agent systems

In conclusion, despite the notable limitations of LLMs, their strengths in cost efficiency, scalability, and adaptability position them as transformative tools for advancing simulation research across various fields, including sociology, economics, and psychology. Future research should focus on integrating LLMs with agent systems and enhancing their personalization to create more authentic simulations.

How Can We Align LLMs More Closely with Human Societies?

After highlighting LLM’s necessasity in simulating, we discuss on how to align LLMs more closely with human societies. Key directions include enriching training data with nuanced psychological and experiential insights, improving the design of agent-based systems, creating realistic and meaningful simulation environments, and externally injecting societal knowledge.

Enriching Training Data with Personal Psychological States and Life Experiences

One foundational approach is to incorporate data that reflects a broader spectrum of human psychological states, personal thoughts, and lived experiences. While current LLMs are trained on general information from diverse sources, this data often lacks depth in representing individual cognition and emotional states. Adding more personalized content, such as reflective diaries or first-person narratives that capture inner motivations, fears, and aspirations, could help the model simulate more realistic human behaviors. Incorporating varied life experiences can also create a richer model that better captures how past events influence decision-making and personality development over time. Personalized LLMs represent a promising direction for simulating more realistic human behaviors by incorporating concrete life experiences and individual psychological profiles

Improving Agent System Design

If we believe agent-based LLM simulations can simulate complex human societies and finish complex tasks, a crucial area of focus is the design of the agents themselves. Research can aim to develop reward functions that encourage agents to make decisions that mirror human behavior more accurately, and can developing the mechanism how to prevent the malacious actions propagrate, balancing short-term and long-term incentives similar to real human decision-making. Additionally, enhancing agent autonomy—such as allowing agents to learn from simulated life experiences, adapt to new environments, and develop unique ‘personalities’—can improve their capacity to replicate diverse behaviors. This could involve adding emotion-like functions or “memories” that allow agents to respond adaptively based on prior interactions, similar to humans.

Careful Simulation Environment Design

The design of the simulation environment significantly affects agent behavior and the outcomes of the simulation. By creating environments that reflect the social, economic, and psychological complexities of human societies, agents can be more likely to engage in behaviors that resonate with human decision-making processes. For example, simulations can introduce social roles, resource scarcity, and moral dilemmas that prompt agents to make trade-offs and prioritize long-term goals over short-term gains. Personalized LLMs and retrieval-augmented generation (RAG)-based simulations can be used to dynamically provide agents with relevant information about the simulated society

External Injection of Societal Knowledge and Values

Another promising direction is to externally inject curated societal knowledge and values into LLMs. This could be done through targeted fine-tuning or post-processing steps that embed specific ethical principles, cultural norms, and societal rules within the model’s decision-making framework. Such an approach would require LLMs to access structured knowledge bases and value systems that reflect human societal complexities, allowing them to make decisions aligned with social norms or ethical standards. For example, by integrating modules on ethics, cultural diversity, and societal roles, LLMs could better understand and reflect the diverse values that drive human societies.

Developing Robust Evaluation Metrics

To ensure that LLMs align closely with human societies, it is essential to develop robust evaluation metrics that assess not only the accuracy but also the depth and contextual relevance of simulated human behavior. For instance, metrics could include alignment with established psychological theories, diversity of agent responses, and the stability of social systems over time. Metrics could include factors like alignment with human moral reasoning, diversity of responses across agents, and the stability of simulated social systems over time. Robust benchmarks that measure how closely agents’ actions mirror real-world human behaviors would allow researchers to refine LLMs more effectively, continuously improving their realism and applicability in social simulations.

LLM-based Simulations in Cryptocurrency Trading

In this section, we analyze a case study of cryptocurrency trading simulations to illustrate the potential and limitations of LLM-based simulations.

Using LLMs to Simulate Human Buy/Sell Behaviors in a Cryptocurrency Market

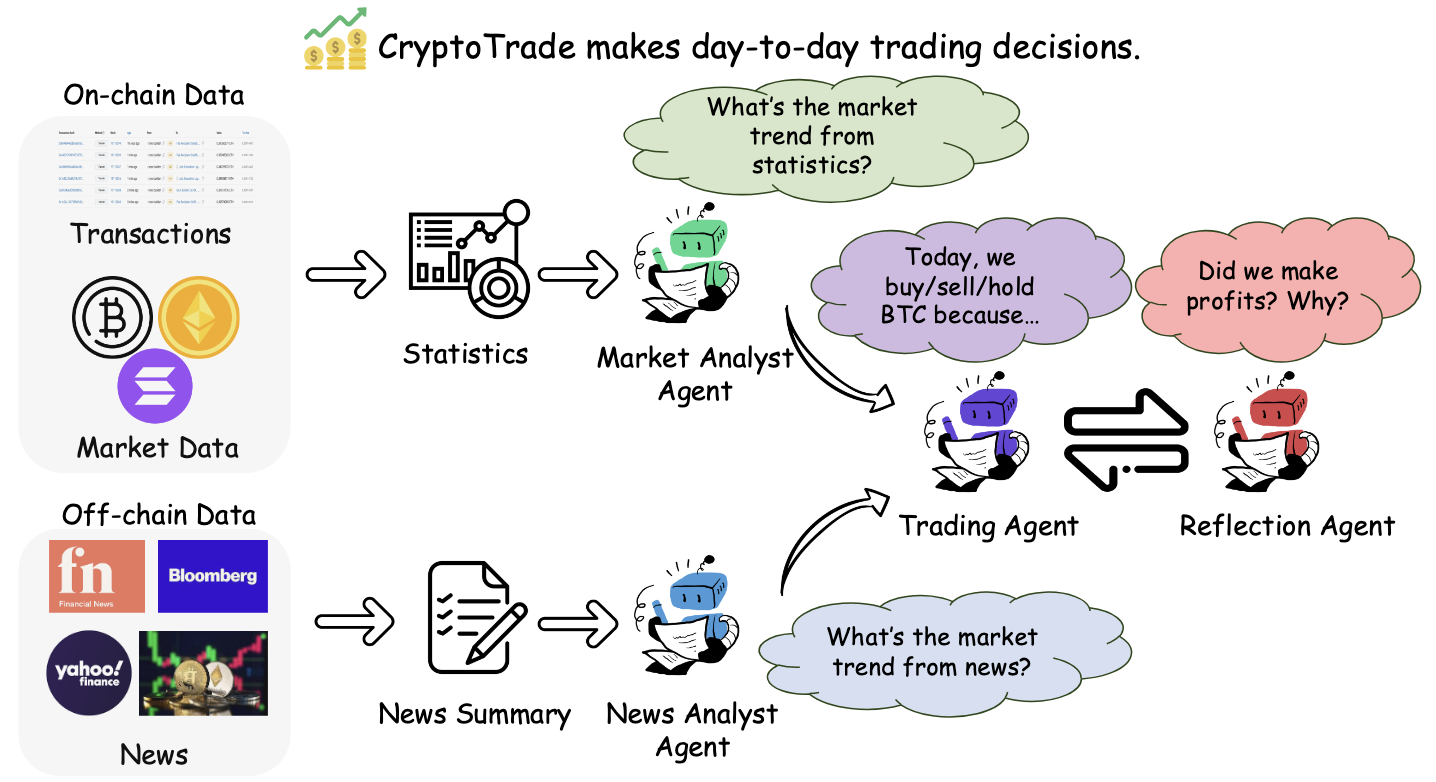

CryptoTrade is an LLM-based trading agent designed to enhance cryptocurrency market trading by integrating both on-chain and off-chain data analysis. It leverages the transparency and immutability of on-chain data, along with the timeliness and influence of off-chain signals, such as news, to offer a comprehensive view of the market. CryptoTrade also incorporates a reflective mechanism that refines its daily trading decisions by assessing the outcomes of previous trades. It simulates the buy and sell behaviors of human traders in the cryptocurrency market. An overview of this simulation is shown in the figure below

And the result of this simulation on the Ethereum market compared with other trading baselines is shown in the figure below

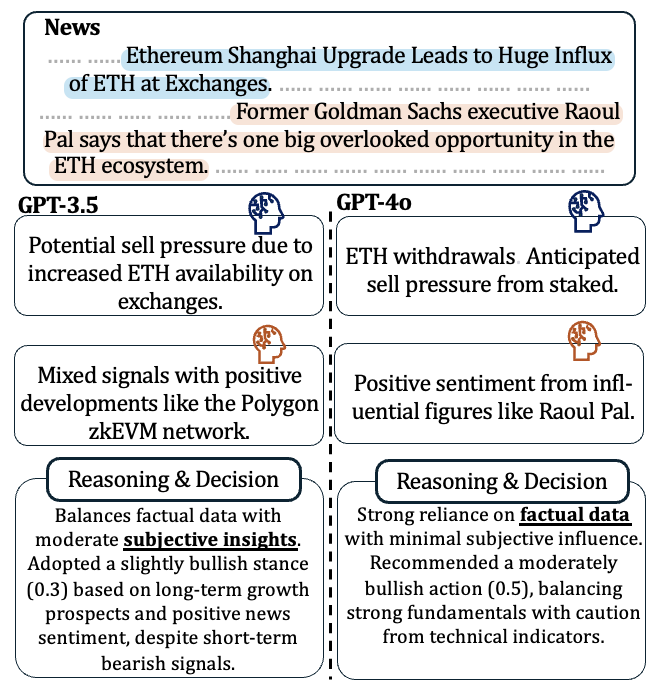

To gain deeper insights into why CryptoTrade takes specific actions, we extract reasoning process from its simulation logs. These logs reveal how GPT-3.5 and GPT-4o respond to the same news event: Ethereum Shanghai Upgrade.

Key Observations

We summarize the key observations of the CryptoTrade simulation performance and reasoning processes as follows:

- LLM Simulation Can’t Outperform Buy and Hold: In a bear market, CryptoTrade lags behind the Buy and Hold strategy by approximately 2%, highlighting a significant limitation. While LLMs are expected to outperform human traders, the results do not align with this expectation.

- Inherent Bias: During trading, CryptoTrade exhibited a tendency to prioritize factual information signals over sentiment-based information. While this approach can be advantageous in a bull market, it proves less effective in a bear market. For instance, in Ethereum trading, CryptoTrade outperformed the Buy and Hold strategy by 3%, likely due to its inherent factual bias. However, this bias is less suited for bear markets, where profitability often requires selling assets proactively at the first signs of a downturn in the social media.

- Herd Behavior: When multiple simulation agents in CryptoTrade relied on the same LLM-based models, they often made identical decisions, which could amplify market movements rather than creating realistic market dynamics.

Lessons Learned

This case study provides several insights about LLM simulations:

-

Hybrid Approaches Needed: The most effective simulations might combine LLM agents with some form of human oversight or intervention, which can be injected as the format of RAG, especially for handling extreme market conditions.

-

Bias Mitigation: To enable LLM simulations to better replicate realistic human behaviors, it is essential to address the factual preference biases inherent in LLMs and to incorporate societal knowledge and values into their design and training.

-

Evaluation Metrics: Currently, the evaluation metric is solely focused on return-related mathematical metrics in trading. However, what if different individuals prefer different trading styles or strategies? How can we assess the performance of LLM simulations in such scenarios? If we aim to simulate a realistic cryptocurrency market with diverse traders, what evaluation metrics should be used?

Conclusion

This blog highlights the limitations of LLM simulations in aligning with human behavior, encouraging deeper reflection on their ability to model the complexity of human societies. At present, LLM-based simulations offer significant potential for research, combining cost efficiency, flexibility, and the capacity to model intricate societal dynamics in innovative ways. However, addressing ethical concerns, such as bias and representation, is essential to ensure these simulations contribute positively and equitably to our understanding of human behavior. To better align LLM simulations with human societies, future research should focus on mitigating inherent biases, enhancing personalization, creating realistic environments, and developing reliable metrics to produce more authentic and impactful simulations.

Acknowledgements

This project is supported by the MOE Academic Research Fund (AcRF) Tier 1 Grant in Singapore (Grant No. T1 251RES2315).