Pre-training of Foundation Adapters for LLM Fine-tuning

Adapter-based fine-tuning methods insert small, trainable adapters into frozen pre-trained LLMs, significantly reducing computational costs while maintaining performance. However, traditional adapter fine-tuning suffers from training instability due to random weight initialization, which can lead to inconsistent performance across runs. To address this issue, this blog post introduces pre-trained foundation adapters as a technique for weight initialization, with the potential to improve both the efficiency and the effectiveness of fine-tuning. Specifically, we combine continual pre-training and knowledge distillation to pre-train foundation adapters. Experiments show that this approach improves performance across multiple tasks. We also highlight the advantage of pre-trained foundation adapter weights over random initialization in a summarization task.

Introduction

Adapter-based fine-tuning methods have revolutionized the customization of large language models (LLMs) by inserting small, trainable adapters inside block layers of frozen pre-trained LLMs

The issue here is that random initialization of adapter weights in fine-tuning leads to training instability and inconsistent performance across different runs

Pre-training of Foundation Adapters

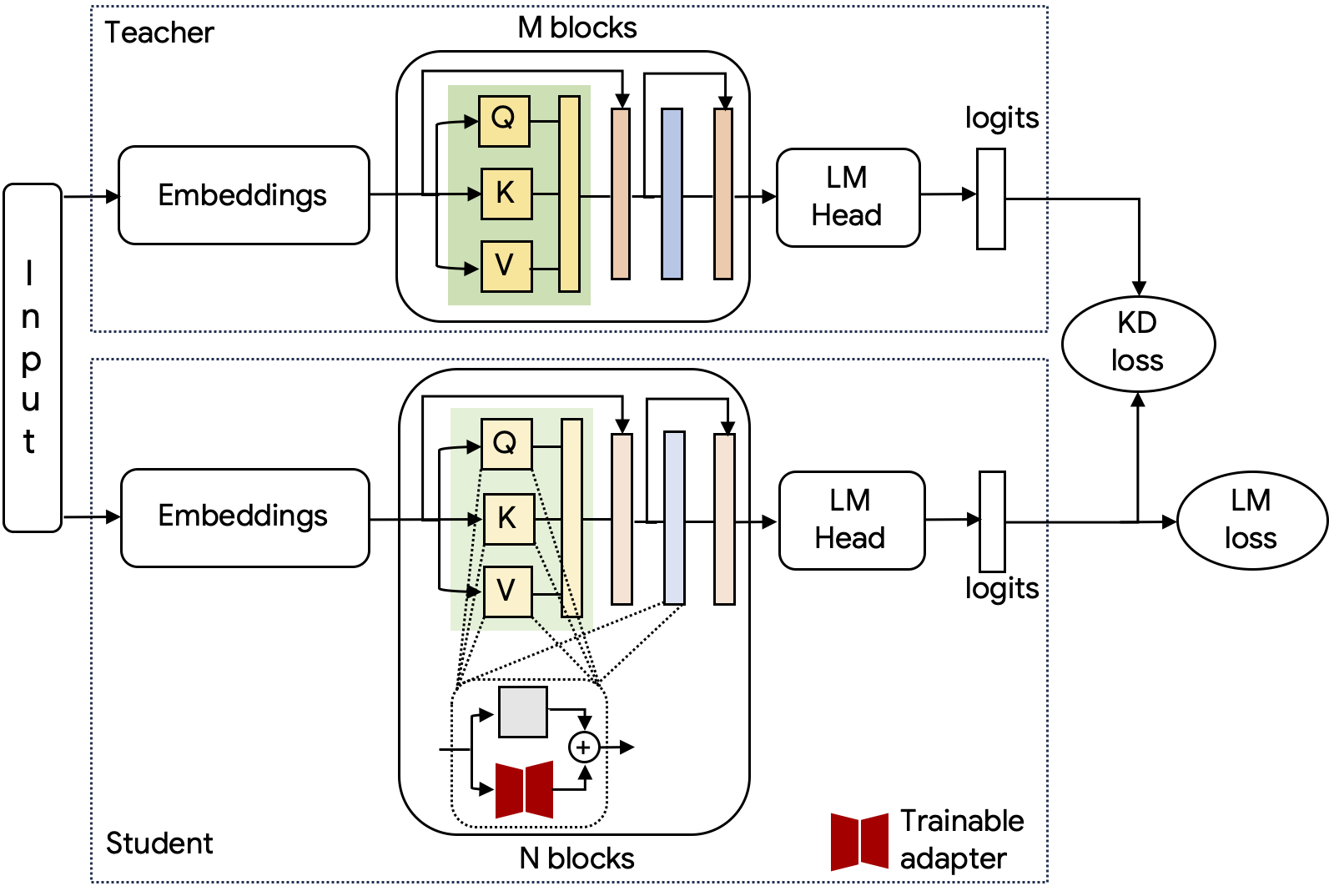

Given a “frozen” pre-trained LLM \(S\) with trainable adapters \(A\) attached to each of its block layers, our research question is: How can we pre-train the adapters \(A\)? We may follow a previous approach

- Knowledge distillation (KD): A larger, frozen pre-trained LLM is employed as the teacher model to facilitate knowledge distillation, transferring its knowledge to a smaller student model that consists of the frozen \(S\) augmented with trainable adapters \(A\). Specifically, the Kullback-Leibler divergence is used to measure the difference between the LM head logits of the teacher and student models, resulting in a corresponding knowledge distillation loss \(\mathcal{L}_{\text{KD}}\) during training, which guides the student to mimic the teacher’s output distributions.

- Continual pre-training (CPT): We continually pre-train the student model (here, \(S\) with adapters \(A\)) using a causal language modeling objective. By keeping all the original weights of \(S\) frozen and updating only the adapters’ weights, we efficiently adapt the model to new data without overwriting its existing knowledge. A corresponding cross-entropy loss \(\mathcal{L}_{\text{LM}}\) is computed based on the LM head logit outputs of the student model during training.

- Joint training: The knowledge distillation and continual pre-training tasks are jointly optimized on a text corpus, with the final objective loss computed as a linear combination of the knowledge distillation loss and the cross-entropy loss: \(\mathcal{L} = \alpha\mathcal{L}_{\text{KD}} + (1 - \alpha)\mathcal{L}_{\text{LM}}\).
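To make the three components concrete, here is a minimal, framework-free sketch of the joint objective. It is an illustration under several assumptions that the text above does not specify: the KL divergence is taken in the direction KL(teacher || student), no softmax temperature is applied, both models share one vocabulary, the logits are already aligned with their next-token targets, and `alpha=0.5` is only a placeholder. The function names are hypothetical, and a real training loop would use a tensor library rather than Python lists.

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax over one vocabulary-sized list."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]


def kd_loss(teacher_logits, student_logits):
    """Mean over token positions of KL(teacher || student).

    Assumption: this KL direction and the absence of a temperature
    are illustrative choices, not confirmed by the post.
    """
    total = 0.0
    for t_row, s_row in zip(teacher_logits, student_logits):
        t_logp = log_softmax(t_row)
        s_logp = log_softmax(s_row)
        total += sum(math.exp(tp) * (tp - sp) for tp, sp in zip(t_logp, s_logp))
    return total / len(student_logits)


def lm_loss(student_logits, target_ids):
    """Mean next-token cross-entropy of the student."""
    total = 0.0
    for row, target in zip(student_logits, target_ids):
        total -= log_softmax(row)[target]
    return total / len(target_ids)


def joint_loss(teacher_logits, student_logits, target_ids, alpha=0.5):
    """L = alpha * L_KD + (1 - alpha) * L_LM (alpha value is a placeholder)."""
    return (alpha * kd_loss(teacher_logits, student_logits)
            + (1 - alpha) * lm_loss(student_logits, target_ids))
```

In this sketch, only the student logits depend on the adapters \(A\), so gradients of the combined loss would flow solely into the adapter weights while the teacher and \(S\) stay frozen.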

Experiments

General setup

In all experiments, we use Low-Rank Adaptation (LoRA)
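For readers less familiar with LoRA, the sketch below shows the low-rank update on a single frozen weight matrix and the conventional random initialization (a small random `A` and an all-zero `B`, so the adapter starts as a no-op). It is a toy illustration in plain Python: the helper names, the standard deviation of 0.02, the `scale` argument, and the 2048-dimensional example are assumptions for illustration, not details of our setup.

```python
import random


def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def init_lora(d_in, d_out, rank, seed=0):
    """Conventional LoRA init: A is small random noise, B is all zeros."""
    rng = random.Random(seed)
    A = [[rng.gauss(0.0, 0.02) for _ in range(d_in)] for _ in range(rank)]
    B = [[0.0] * rank for _ in range(d_out)]
    return A, B


def lora_forward(W, A, B, x, scale=1.0):
    """Compute W x + scale * B (A x); W is frozen, only A and B are trained."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    return [b + scale * u for b, u in zip(base, update)]


def lora_param_count(d_in, d_out, rank):
    """Trainable parameters added to one adapted weight matrix."""
    return rank * (d_in + d_out)


# Hypothetical square projection of width 2048 with rank 8.
print(lora_param_count(2048, 2048, 8))
```

Initializing from a pre-trained foundation adapter amounts to replacing the `A` and `B` returned by `init_lora` with previously trained values before fine-tuning starts.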

In a preliminary experiment, we perform continual pre-training using the “frozen” Llama-3.2-1B as the student model with LoRA rank 8 on the QuRatedPajama-260B dataset

Knowledge distillation helps improve performance

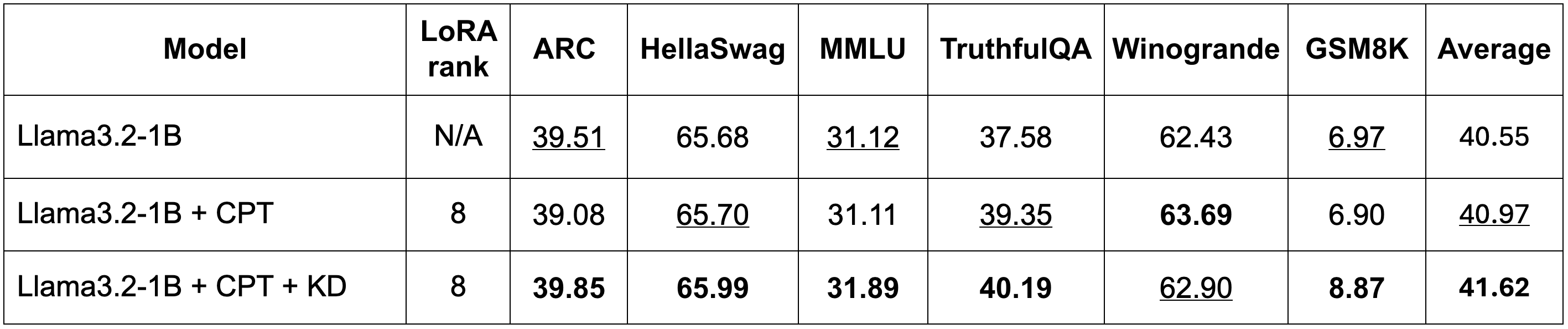

To investigate the effectiveness of knowledge distillation, our initial experiments utilize the student model Llama-3.2-1B with trainable LoRA of rank 8 and employ Llama-3.1-8B-Instruct

Table 1 presents results obtained on 6 key Open LLM benchmarks using the Eleuther AI Language Model Evaluation Harness, including AI2 Reasoning Challenge (ARC; 25-shot)

Compared to the baseline model Llama-3.2-1B, applying CPT alone yields only small changes on most benchmarks, with the average score rising from 40.55 to 40.97. This improvement is primarily due to increases in TruthfulQA (from 37.58 to 39.35) and Winogrande (from 62.43 to 63.69), while the remaining benchmarks exhibit negligible or negative changes. In contrast, the model with both CPT and KD shows a more substantial improvement over applying CPT alone. This is evident in the increased average score of 41.62, driven by improvements in MMLU, TruthfulQA, and particularly GSM8K, which increases from 6.90 to 8.87. These results suggest that combining CPT with KD yields broader performance improvements across multiple tasks.
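The average-score gains quoted above can be checked with a few lines of arithmetic. The snippet uses only the three averages reported in this section; the dictionary keys are labels chosen for illustration.

```python
# Average scores over the 6 benchmarks, as reported in the text.
averages = {"baseline": 40.55, "CPT": 40.97, "CPT+KD": 41.62}

cpt_gain = round(averages["CPT"] - averages["baseline"], 2)
kd_gain_over_cpt = round(averages["CPT+KD"] - averages["CPT"], 2)
total_gain = round(averages["CPT+KD"] - averages["baseline"], 2)

print(cpt_gain)          # 0.42
print(kd_gain_over_cpt)  # 0.65
print(total_gain)        # 1.07
```

Adding KD on top of CPT therefore contributes a larger share of the overall gain in average score than CPT does on its own.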

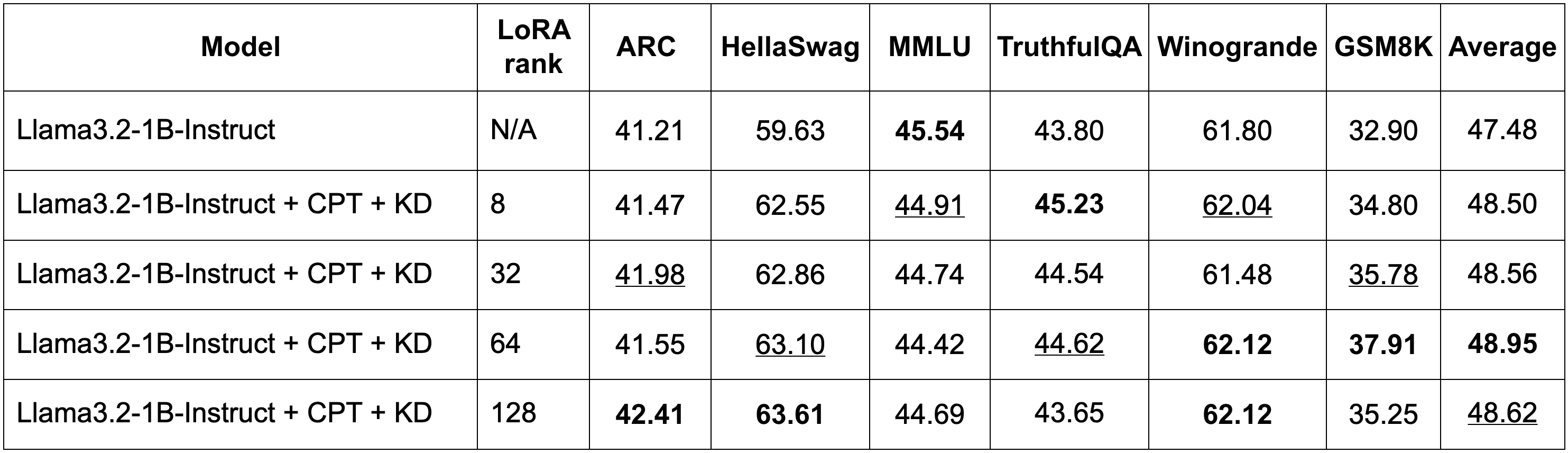

Effects of different LoRA ranks

We study the effects of different LoRA ranks (8, 32, 64, and 128) using the student model Llama-3.2-1B-Instruct and the teacher model Llama-3.1-8B-Instruct, applying both CPT and KD. Table 2 shows the obtained results on the 6 key Open LLM benchmarks, revealing improvements of over 1.0 point at every rank compared to the baseline Llama-3.2-1B-Instruct. The model with a LoRA rank of 64 obtains the highest average score, 48.95, and scores 37.91 on GSM8K. However, the overall average scores (48.50, 48.56, 48.95, and 48.62 for ranks 8, 32, 64, and 128, respectively) show minimal variation, indicating that LoRA rank has little impact on performance. Benchmarks such as ARC and Winogrande also exhibit only slight fluctuations, and the small GSM8K improvements from rank 8 to 64 are not large enough to indicate a clear advantage for any particular rank.
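To quantify how little the rank matters here, the short snippet below computes the spread of the four average scores reported above and picks out the best-scoring rank. It uses only numbers from this section.

```python
# Average score over the 6 benchmarks for each LoRA rank, from the text.
rank_avg = {8: 48.50, 32: 48.56, 64: 48.95, 128: 48.62}

best_rank = max(rank_avg, key=rank_avg.get)
spread = round(max(rank_avg.values()) - min(rank_avg.values()), 2)

print(best_rank)  # 64
print(spread)     # 0.45
```

The gap between the best and worst rank is smaller than the gain of over 1.0 point that every rank achieves over the baseline.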

Use-case: Summarization

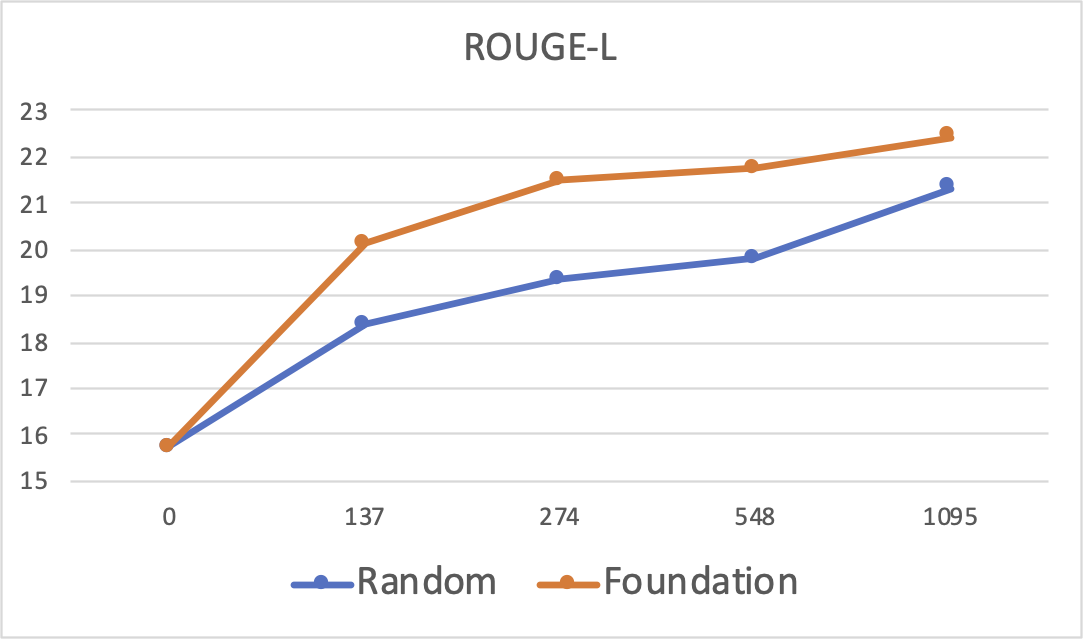

We also examine the effectiveness of pre-trained foundation LoRA adapters for downstream task fine-tuning, specifically supervised fine-tuning. We utilize the base LLM Llama-3.2-1B-Instruct and employ the QMSum dataset

Figure 2 presents the ROUGE-L results on the QMSum test set when fine-tuning with 1095 training examples (the full QMSum training set), 548 (one half), 274 (one fourth), and 137 (one eighth). With 0 training examples, the ROUGE-L score of 15.73 reflects the baseline model’s performance with zero-shot prompting. Fine-tuning substantially improves the summarization score, even with just over 100 training examples. Notably, initializing from pre-trained LoRA weights outperforms random initialization at every training size, demonstrating the effectiveness of foundation initialization from pre-trained LoRA for this specific use case.
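As a reminder of what ROUGE-L measures, the sketch below computes a sentence-level ROUGE-L F-measure from the longest common subsequence (LCS) of two token sequences. It is a simplified illustration: the lowercased whitespace tokenization, the lack of stemming, and the use of the balanced F-measure are assumptions, and scores from standard ROUGE packages may differ. The function names are hypothetical.

```python
def lcs_length(a, b):
    """Length of the longest common subsequence of two token lists."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            if x == y:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_f1(reference, candidate):
    """ROUGE-L F-measure in [0, 1] on lowercased whitespace tokens."""
    ref = reference.lower().split()
    cand = candidate.lower().split()
    if not ref or not cand:
        return 0.0
    lcs = lcs_length(ref, cand)
    if lcs == 0:
        return 0.0
    precision = lcs / len(cand)
    recall = lcs / len(ref)
    return 2 * precision * recall / (precision + recall)


# The LCS is "the cat on mat" (4 tokens): precision 4/4, recall 4/6.
score = rouge_l_f1("the cat sat on the mat", "the cat on mat")
```

Scores such as 15.73 are on a 0 to 100 scale, which corresponds to multiplying the value returned by this sketch by 100.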

Conclusion

In this blog post, we present a joint training approach that combines continual pre-training and knowledge distillation to pre-train foundation adapters for adapter weight initialization in LLM fine-tuning. Our experiments demonstrate that this approach achieves performance improvements across multiple tasks. In particular, we show that for a specific use case in summarization, initializing from a pre-trained foundation LoRA improves performance compared to random initialization. In future work, we plan to pre-train LoRA adapters for various models and evaluate these pre-trained adapters on additional downstream tasks.