Behavioral Differences in Mode-Switching Exploration for Reinforcement Learning

In 2022, researchers from Google DeepMind presented an initial study on mode-switching exploration, by which an agent separates its exploitation and exploration actions more coarsely throughout an episode by intermittently and significantly changing its behavior policy. We supplement their work in this blog post by showcasing some observed behavioral differences between mode-switching and monolithic exploration on the Atari suite and presenting illustrative examples of its benefits. This work aids practitioners and researchers by providing practical guidance and eliciting future research directions in mode-switching exploration.

1. Introduction

Imagine learning to ride a bicycle for the first time. This task requires the investigation of numerous actions such as steering the handlebars to change direction, shifting weight to maintain balance, and applying pedaling power to move forward. To achieve any satisfaction, a complex sequence of these actions must be taken for a substantial amount of time. However, a dilemma emerges: many other tasks such as eating, sleeping, and working may result in more immediate satisfaction (e.g. lowered hunger, better rest, bigger paycheck), which may tempt the learner to favor other tasks. Furthermore, if enough satisfaction is not quickly achieved, the learner may even abandon the task of learning to ride a bicycle altogether.

One frivolous strategy (Figure 1, Option 1) to overcome this dilemma is to interleave a few random actions on the bicycle throughout the remaining tasks of the day. This strategy neglects the sequential nature of bicycle riding and will achieve satisfaction very slowly, if at all. Furthermore, this strategy may interrupt and reduce the satisfaction of the other daily tasks. The more intuitive strategy (Figure 1, Option 2) is to dedicate significant portions of the day to explore the possible actions of bicycle riding. The benefits of this approach include testing the sequential relationships between actions, isolating different facets of the task for quick mastery, and providing an explicit cutoff point to shift focus and accomplish other daily tasks. Also – let’s face it – who wants to wake up in the middle of the night to turn the bicycle handlebar twice before going back to bed?

The above example elicits the main ideas of the paper When Should Agents Explore?

This introduction section continues with a brief discussion of topics related to mode-switching behavior policies, ranging from different temporal granularities to algorithms in the literature that exhibit mode-switching behavior. We emphasize practical understanding rather than attempting to present an exhaustive classification or survey of the subject. Afterwards, we discuss our motivation and rationale for this blog post: the authors of the initial mode-switching study showed that training with mode-switching behavior policies surpassed the performance of training with monolithic behavior policies on hard-exploration Atari games; we augment their work by presenting observed differences between mode-switching and monolithic behavior policies through supplementary experiments on the Atari benchmark and other illustrative environments. Possible avenues for applications and future investigations are emphasized throughout the discussion of each experiment. It is assumed that the interested reader has basic knowledge in RL techniques and challenges before proceeding to the rest of this blog post.

Mode-Switching Distinctions

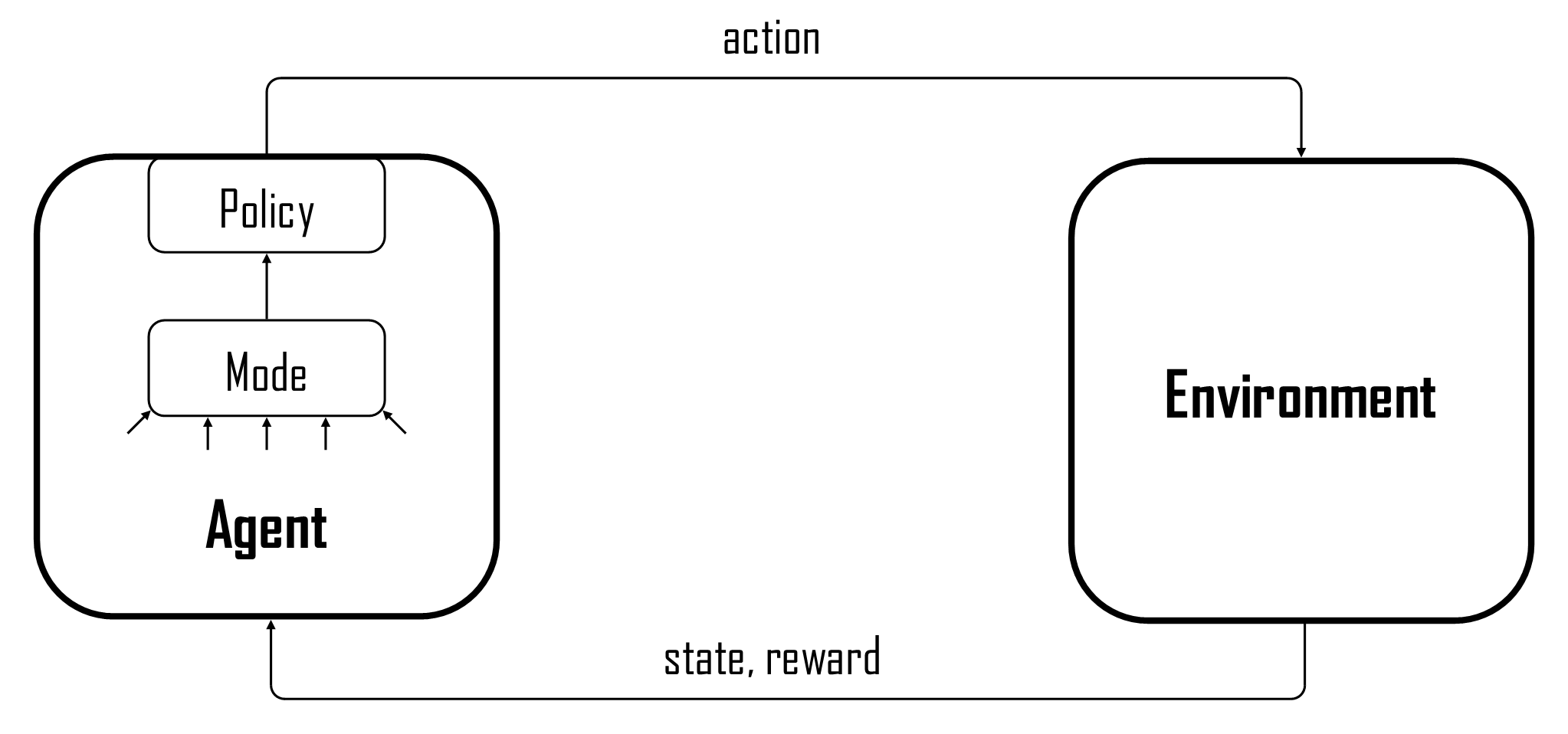

Mode-switching behavior policies (which we will sometimes shorten to switching policies, just as we shorten monolithic behavior policies to monolithic policies) were explicitly introduced in the initial mode-switching study. We now briefly contrast switching policies with monolithic policies and with the previous exploration literature. Figure 2 illustrates the high-level, pivotal difference between switching and monolithic policies: at the beginning of each time step, an agent with a switching policy may use all of its available information to determine its behavior mode for the current time step and then output a corresponding behavior policy to determine the action. A key distinction is that switching policies can change drastically between time steps, since the modes can be tailored to a variety of different purposes (e.g. exploration, exploitation, mastery, novelty). As the graphic illustrates, switching is such a general addition to an algorithm that it was not exhaustively characterized in the initial study.

A mode period is defined as a sequence of time steps in a single mode. At the finest granularity, step-level periods only last one step in length; the primary example is $\epsilon$-greedy exploration because its behavior policy switches between explore and exploit mode at the level of one time step

The question investigated by the initial mode-switching study is when to switch. This blog post and the initial study only perform experiments with two possible modes, exploration and exploitation, so the question of when to switch reduces to the question of when to explore. A related question is how much to explore, which concerns the proportion of exploration actions taken over the entire course of training. This problem encompasses the annealing of exploration hyperparameters including $\epsilon$ from $\epsilon$-greedy policies

Mode-Switching Basics

The preceding subsection narrowed our focus to determining when to explore using intra-episodic mode periods. At the time of publication of the initial mode-switching study, the previous literature contained a few works that had incorporated basic aspects of intra-episodic mode-switching exploration. For example, Go-Explore

The starting mode is the mode of the algorithm on the first time step, usually exploit mode. The set of behavior modes (e.g. explore and exploit) must contain at least two modes, and the set of behaviors induced by all modes should be fairly diverse. The switching trigger is the mechanism that prompts the agent to switch modes and is perhaps the most interesting consideration of switching policies. An informed trigger incorporates aspects of the state, action, and reward signals; it is actuated after crossing a prespecified threshold such as the difference between the expected and realized reward. A blind trigger acts independently of these signals; for example, it can be actuated after a certain number of time steps has elapsed or actuated randomly at each time step with a prespecified probability. A bandit meta-controller

| Mode-Switching Facet | Description |
|---|---|
| Starting Mode | Mode during first time step at episode start |
| Behavior Mode Set | Set of modes with diverse set of associated behavior policies |
| Trigger | Informs agent when to switch modes |
| Bandit Meta-Controller | Adapts switching hyperparameters to maximize episodic return |
| Homeostasis | Adapts switching threshold to achieve a target rate |
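To make the starting mode, mode set, and trigger facets more concrete, here is a minimal Python sketch of a two-mode switcher with interchangeable triggers. Every name in it (`TwoModeSwitcher`, `blind_probability_trigger`, `blind_counter_trigger`, `informed_reward_gap_trigger`) is hypothetical and chosen for illustration; it is not the implementation from the initial study. In particular, the informed trigger assumes the signal is the absolute gap between expected and realized reward, and the bandit meta-controller and homeostasis are left out.

```python
import random
from typing import Callable

EXPLOIT, EXPLORE = "exploit", "explore"


def blind_probability_trigger(p: float, rng: random.Random) -> Callable[..., bool]:
    """Blind trigger: fires with probability p on each step, ignoring all signals."""
    def trigger(**_signals) -> bool:
        return rng.random() < p
    return trigger


def blind_counter_trigger(period: int) -> Callable[..., bool]:
    """Blind trigger: fires once every `period` calls, ignoring all signals."""
    steps = 0

    def trigger(**_signals) -> bool:
        nonlocal steps
        steps += 1
        if steps >= period:
            steps = 0
            return True
        return False
    return trigger


def informed_reward_gap_trigger(threshold: float) -> Callable[..., bool]:
    """Informed trigger (assumed form): fires when |expected - realized| > threshold."""
    def trigger(*, expected_reward: float, realized_reward: float, **_signals) -> bool:
        return abs(expected_reward - realized_reward) > threshold
    return trigger


class TwoModeSwitcher:
    """Tracks the current mode and toggles it whenever the trigger fires."""

    def __init__(self, trigger: Callable[..., bool], starting_mode: str = EXPLOIT):
        self.trigger = trigger
        self.mode = starting_mode

    def step(self, **signals) -> str:
        """Return the mode for this step; a firing trigger changes the next step's mode."""
        current = self.mode
        if self.trigger(**signals):
            self.mode = EXPLORE if self.mode == EXPLOIT else EXPLOIT
        return current
```

A switcher built with `blind_counter_trigger(3)` alternates between three exploit steps and three explore steps, while swapping in `informed_reward_gap_trigger` changes only when the switch happens, not how the modes behave.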

Blog Post Motivation

The initial mode-switching study performed experiments solely on 7 hard-exploration Atari games. The focus of the study was to show the increase in score on these games when using switching policies versus monolithic policies. One area of future work pointed out by the reviewers is to increase the understanding of these less-studied policies. For example, the meta review of the paper stated that an illustrative task may help provide intuition of the method. The first reviewer noted how the paper could be greatly improved through demonstrating specific benefits of the method on certain tasks. The second reviewer stated how discussing observed differences on the different domains may be useful. The third reviewer mentioned how the paper could be strengthened by developing guidelines for practical use. The last reviewer stated that it would be helpful to more thoroughly compare switching policies to monolithic policies for the sake of highlighting their superiority.

We extend the initial mode-switching study and progress towards further understanding of these methods in this blog post through additional experiments. The following experiments each discuss an observed behavioral difference in switching policies versus monolithic policies. We focus on behavioral differences in this work, as they are observable in the environment and are not unique to the architecture of certain agents

2. Experiments

This section begins with a discussion on the experimental setup before delving into five experiments that highlight observational differences in switching and monolithic behavior policies. The complete details of the agent and environments can be found in the accompanying GitHub repository.

- The experimental testbed is comprised of 10 commonly-used Atari games: Asterix, Breakout, Space Invaders, Seaquest, Q*Bert, Beam Rider, Enduro, MsPacman, Bowling, and River Raid. Environments follow the standard Atari protocols of incorporating sticky actions and only providing a terminal signal when all lives are lost.

- A Stable-Baselines3 DQN policy is trained on each game for 25 epochs of 100K time steps each, totaling 2.5M time steps or 10M frames due to frame skipping. The DQN policy takes an exploration action on 10% of time steps after being linearly annealed from 100% across the first 250K time steps.

- A switching policy and monolithic policy were evaluated on the testbed using the greedy actions of the trained DQN policy when taking exploitation actions. Evaluations were made for 100 episodes for each game and epoch. The monolithic policy was $\epsilon$-greedy with a 10% exploration rate. The switching policy we chose to examine incorporates blind switching; we leave an analogous investigation of informed switching policies to future work (see initial study for background and experiments using informed switching policies). The policy begins in exploit mode and randomly switches to uniform random explore mode 0.7% of the time. It randomly chooses an explore mode length from the set $\{5, 10, 15, 20, 25\}$ with probabilities $\{0.05, 0.20, 0.50, 0.20, 0.05\}$. During experimentation, we determined that this switching policy took exploration actions at an almost identical rate as the monolithic policy (10%).
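The blind switching schedule described above can be simulated in a few lines. The sketch below is an illustration rather than the code from the accompanying repository: the function names are hypothetical, and it assumes that the 0.7% switch is sampled on every exploit step and that the explore period begins on the step where the switch fires. Under those assumptions the expected explore length is 15 steps and the expected exploit run is roughly $1/0.007 \approx 143$ steps, so about $15 / (15 + 143) \approx 9.5\%$ of steps are exploration steps, close to the 10% rate of the monolithic policy.

```python
import random
from typing import List

SWITCH_PROB = 0.007                       # chance of entering explore mode on an exploit step
EXPLORE_LENGTHS = [5, 10, 15, 20, 25]     # possible explore period lengths
LENGTH_PROBS = [0.05, 0.20, 0.50, 0.20, 0.05]


def switching_modes(num_steps: int, rng: random.Random) -> List[bool]:
    """Per-step flags for the blind switching policy (True marks an exploration step)."""
    flags = []
    remaining = 0  # exploration steps left in the current explore period
    for _ in range(num_steps):
        # Assumption: the trigger is only sampled while in exploit mode.
        if remaining == 0 and rng.random() < SWITCH_PROB:
            remaining = rng.choices(EXPLORE_LENGTHS, weights=LENGTH_PROBS)[0]
        if remaining > 0:
            flags.append(True)
            remaining -= 1
        else:
            flags.append(False)
    return flags


def monolithic_modes(num_steps: int, rng: random.Random, epsilon: float = 0.10) -> List[bool]:
    """Per-step flags for epsilon-greedy: each step explores independently."""
    return [rng.random() < epsilon for _ in range(num_steps)]


if __name__ == "__main__":
    rng = random.Random(0)
    n = 1_000_000
    print("switching explore rate:", sum(switching_modes(n, rng)) / n)
    print("monolithic explore rate:", sum(monolithic_modes(n, rng)) / n)
```

Both schedules explore at nearly the same overall rate; what differs is that the switching schedule groups its exploration steps into contiguous periods.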

We briefly cite difficulties and possible confounding factors in our experimental design to aid other researchers during future studies on this topic.

- The DQN policy was trained using a monolithic policy, and unsurprisingly, monolithic policies had slightly higher evaluation scores. Additional studies may use exploitation actions from a policy trained with switching behavior for comparison.

- Many of our experiments aim to evaluate the effect of exploration or exploitation actions on some aspect of agent behavior. Due to delayed gratification in RL, the credit assignment problem persists and confounds the association of actions to behaviors. To attempt to mitigate some confounding factors of this problem, we weight the behavior score of the agent at an arbitrary time step by the proportion of exploration or exploitation actions in a small window of past time steps; for example, in the first experiment, we weight the effect of taking exploration actions on yielding terminal states by calculating the proportion of exploration actions within 10 time steps of reaching the terminal state. Then, we average the proportions across 100 evaluation episodes to compute a final score for a single epoch for a single game.

- Lastly, we only claim to have made observations about the behavioral differences, and we do not claim to have produced statistically significant results; we leave this analysis to future work.
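The window-based weighting described above can be sketched as follows. The helper names are hypothetical, and two details are our assumptions rather than something stated in the text: the window includes the final time step of the episode, and episodes shorter than the window are scored over the steps they actually contain.

```python
from typing import List, Sequence


def window_explore_proportion(is_explore: Sequence[bool], t: int, window: int = 10) -> float:
    """Proportion of exploration actions in the `window` steps ending at step t (inclusive)."""
    start = max(0, t - window + 1)
    recent = is_explore[start:t + 1]
    return sum(recent) / len(recent)


def terminal_score(episodes: List[Sequence[bool]], window: int = 10) -> float:
    """Average exploration proportion just before the terminal state, across episodes."""
    scores = [
        window_explore_proportion(ep, len(ep) - 1, window)
        for ep in episodes
        if len(ep) > 0  # skip empty episodes, which have no terminal step
    ]
    return sum(scores) / len(scores)
```

For a monolithic policy with a 10% exploration rate, a score near 0.10 means that terminal states are preceded by no more exploration than any other step.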

Concentrated Terminal States

Exploration actions are generally considered to be suboptimal and are incorporated to learn about the state space rather than accrue the most return. Many environments contain regions of the state space that simply do not need more exploration, such as critical states

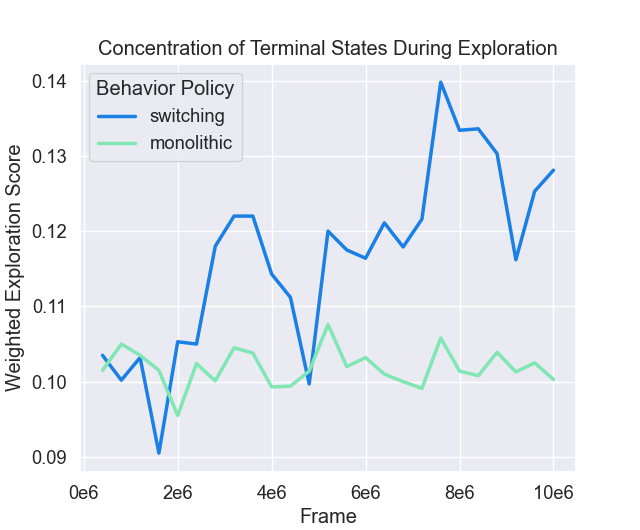

Our first experiment attempts to analyze the relationship between taking many exploration actions in succession and reaching a terminal state. Each terminal state is given a score equal to the proportion of exploration actions during the past 10 time steps (see second paragraph of Experiments section for rationale). Final scores for each behavior policy and epoch are computed by averaging the scores of each terminal state across all 100 evaluation episodes and each game. The results are shown in Figure 3. Switching policies produced terminal states that more closely followed exploration actions. Furthermore, the effect was more pronounced as the policies improved, most likely due to the increased disparity of optimality between exploitation and exploration actions that seems more detrimental to switching policies which explore multiple times in succession. Note how the scores for monolithic policies are near 0.10 on average, which is the expected proportion of exploration actions per episode and therefore suggests that exploration actions had little effect. These results demonstrate that switching policies may be able to concentrate terminal states to specific areas of an agent’s trajectory.

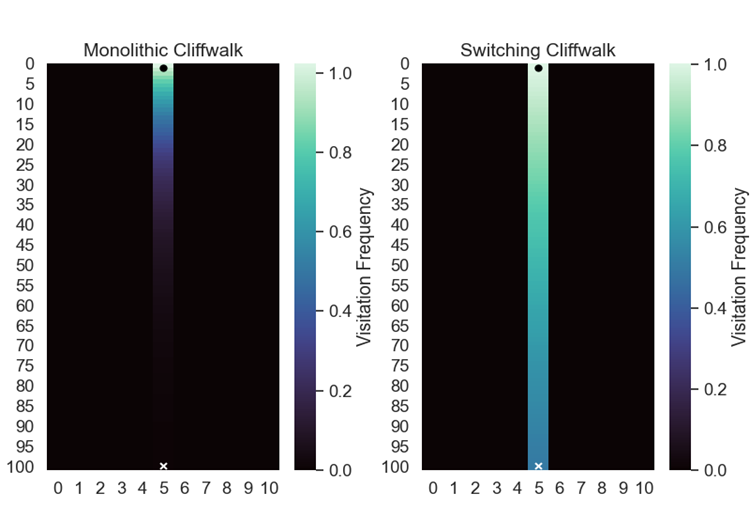

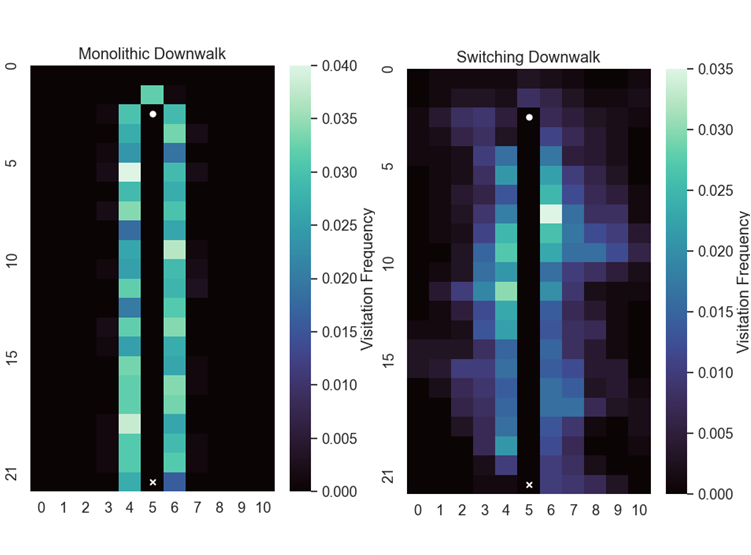

We showcase a quick illustrative example of the ability of switching policies to concentrate terminal states more uniformly in a cliffwalk environment (Figure 4). The agent starts at the black circle in the middle column and top row of a 101$\times$11 grid and attempts to reach the white ‘x’ at the bottom. All states aside from those in the middle column are terminal, and the heatmaps show the visitation frequency per episode of all non-terminal states across 10K episodes. When the exploitation policy is to move only downward and the behavior policies are the usual policies in these experiments, the agent incorporating a switching policy more heavily concentrates the terminal states in exploration mode and visits states further down the cliffwalk environment at a higher rate per episode.

Environments that incorporate checkpoint states that agents must traverse to make substantial progress may benefit from switching policies that concentrate exploration periods away from the checkpoints. For example, the game of Montezuma’s Revenge

Early Exploration

Monolithic policies uniformly take exploration actions throughout an episode, and as a result, the exploration steps are less concentrated than those of switching policies. While the expected number of exploration steps may be the same per episode in monolithic policies, certain situations may require more concentrated exploration during the beginning of episodes. For example, the build orders in StarCraft II

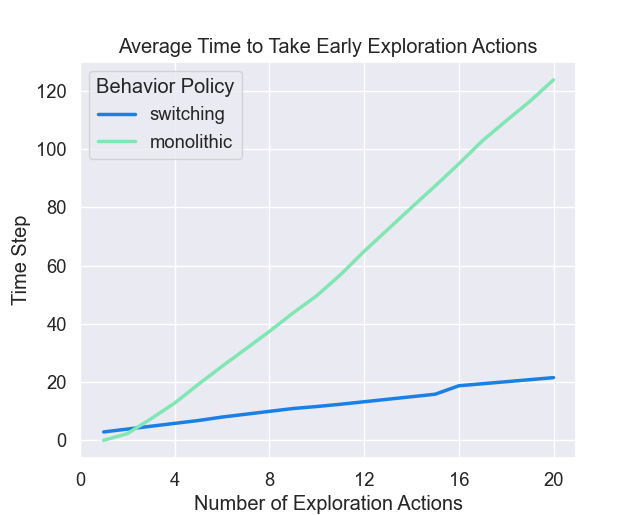

We perform an experiment to determine how quickly a policy takes a prespecified number of exploration actions. Specifically, for each policy we take the 10 fastest of its 100 episodes and compute the average number of time steps needed to take at least $x$ total exploration actions, and we repeat this process for $x \in \{1, 2, 3, \ldots, 20\}$. We compare the 10 fastest episodes because we are only interested in gauging whether switching behavior is flexible enough to achieve this specific facet of exploration (beginning exploration) during a small percentage of episodes rather than in every episode. Note that this experiment did not need to utilize the Atari signals, so we only used data from the last epoch. Results were again averaged over each game and shown in Figure 5. It is clear that some episodes contain many more exploration actions concentrated in the beginning few time steps with switching policies. This makes sense intuitively, as only one switch needs to occur early in an episode with a switching policy for many exploration actions to be taken immediately afterwards. The difference increases roughly linearly for greater numbers of necessary exploration actions and shows that switching natively produces more episodes with exploration concentrated in the beginning.
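As a rough illustration of this measurement, the sketch below computes the time to the $x$-th exploration action per episode and averages it over the fastest episodes. The function names are hypothetical, and we assume that episodes which never reach $x$ exploration actions are simply excluded; the text does not say how such episodes were treated.

```python
from typing import List, Optional, Sequence


def steps_until_explorations(is_explore: Sequence[bool], x: int) -> Optional[int]:
    """Time steps elapsed when the x-th exploration action is taken, or None if never."""
    count = 0
    for t, flag in enumerate(is_explore, start=1):
        count += bool(flag)
        if count >= x:
            return t
    return None


def fastest_mean(episodes: List[Sequence[bool]], x: int, top_k: int = 10) -> float:
    """Average time-to-x over the top_k fastest episodes that reach x explorations."""
    times = sorted(
        t for t in (steps_until_explorations(ep, x) for ep in episodes) if t is not None
    )
    fastest = times[:top_k]
    if not fastest:
        raise ValueError("no episode reached the requested number of exploration actions")
    return sum(fastest) / len(fastest)
```

Calling `fastest_mean(episodes, x)` for each $x$ from 1 to 20, once with switching episodes and once with monolithic episodes, yields the two curves being compared.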

We illustrate beginning exploration with a downwalk environment in which an agent attempts to first move to the middle column and then down the middle column to the white ‘x’ (Figure 6). The agent starts in the second row in the middle column at the white circle, and visitation frequencies across 1K episodes are shown for all states aside from those between the white circle and the white ‘x’, inclusive. We chose to analyze this environment because it is a crude approximation of the trajectory of agents that have learned a single policy and immediately move away from the initial start state at the beginning of an episode. The switching and monolithic policies are the same as before, and switching produces much higher visitation counts at states further from the obvious exploitation trajectory.

Environments that may benefit from flexible early exploration are sparse reward environments that provide a single nonzero reward at the terminal state. Many game environments fall into this category, since a terminal reward of 1 can be provided for a win, -1 for a loss, and 0 for a draw. In such environments, agents usually need to learn at states near the sparse reward region before learning at states further away, also known as cascading

Concentrated Return

In contrast to the investigation in the first experiment, exploitation actions of a trained agent are presumed to be better than all other alternatives. Since agents aim to maximize the expected return in an environment, exploitation actions often accrue relatively large amounts of expected return. For example, the initial experiments of DQN

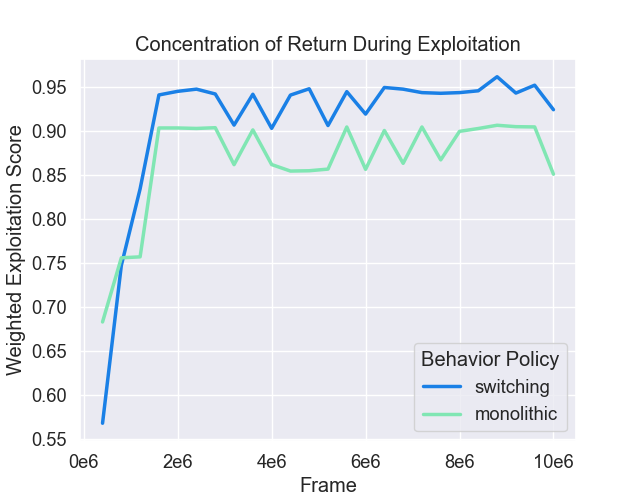

We perform an experiment to determine the proportion of return that is concentrated during exploitation periods. Each reward during an episode is weighted by the proportion of exploitation actions during the past 10 time steps. The score for each episode is the sum of weighted rewards divided by the total rewards. Scores for each behavior policy and epoch are computed by averaging scores across all games. The results are shown in Figure 7. Quite quickly, exploitation steps of switching policies contain a greater percentage of the return than those of monolithic policies. This trend seems fairly constant after roughly 2M frames, with switching policies having roughly 95% of the return in exploitation steps and monolithic policies having roughly 90% of the return; from another point of view, exploration steps yield 5% of the return for switching policies and 10% of the return for monolithic policies. These results agree with Experiment 1: switching policies generally reach terminal states more frequently in explore mode, after which no further rewards are received. Since most of the rewards in our selected Atari games are positive, switching policies should accrue lower return while in explore mode.
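The per-episode score described in this experiment can be written compactly. The sketch below uses a hypothetical function name and assumes the 10-step window includes the step on which the reward arrives; episodes with zero total reward have no defined score and are rejected.

```python
from typing import Sequence


def exploit_weighted_return_share(
    rewards: Sequence[float], is_explore: Sequence[bool], window: int = 10
) -> float:
    """Sum of rewards weighted by the recent exploitation proportion, over total reward."""
    total = sum(rewards)
    if total == 0:
        raise ValueError("score is undefined for an episode with zero total reward")
    weighted = 0.0
    for t, reward in enumerate(rewards):
        start = max(0, t - window + 1)
        recent = is_explore[start:t + 1]
        exploit_proportion = 1.0 - sum(recent) / len(recent)
        weighted += reward * exploit_proportion
    return weighted / total
```

A score of 1.0 means every reward arrived after a window of pure exploitation, while lower scores mean that more of the return arrived during or shortly after exploration.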

One notable case in which exploitation steps are concentrated together is in resetting methods such as Go-Explore

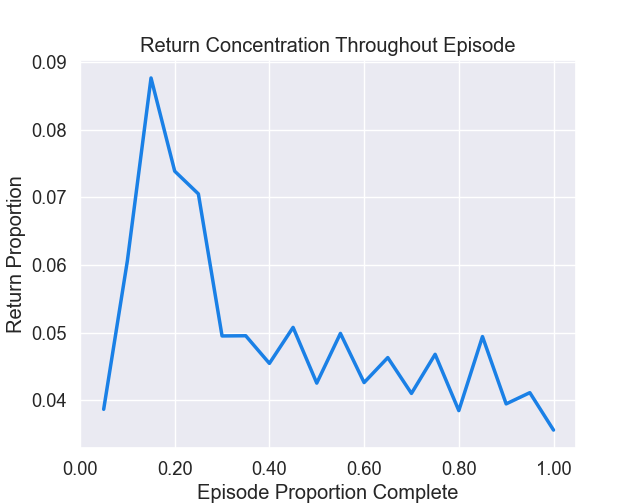

In Figure 8, we plot the proportion of episode return over the past 5% of the episode versus the current proportion of the episode that is complete. Data is taken from the last training epoch. The results show that switching policies concentrate return more towards the beginning of each episode, most likely because the first exploit mode of switching policies is relatively long. Future work involves determining the extent to which the beginning exploitation mode of switching policies serves as a flexible alternative to resetting, which would have applications in situations that do not allow for manual resets such as model-free RL.

Post-Exploration Entropy

Monolithic policies such as $\epsilon$-greedy are nearly on-policy when any exploration constants have been annealed. In contrast, the exploration periods of switching policies are meant to free the agent from its current exploitation policy and allow the agent to experience significantly different trajectories than usual. Due to the lack of meaningful learning at states that are further from usual on-policy trajectories, the exploitation actions at those states are more likely to have greater diversity. In this experiment, we investigate the diversity of the action distribution after exploration periods.

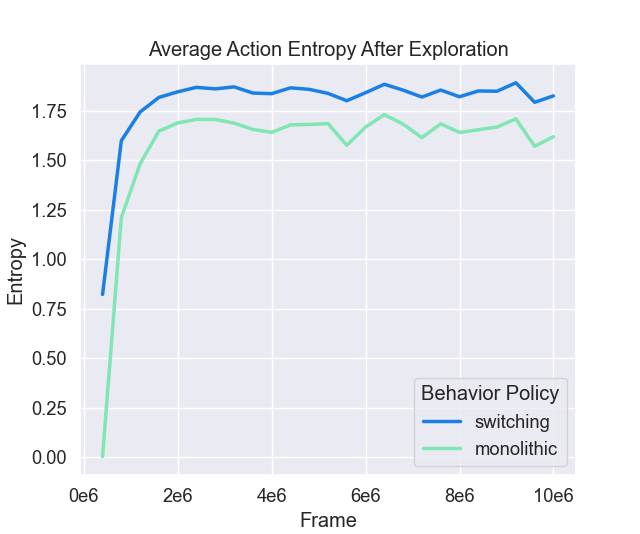

We quantify the diversity of the realized action distribution in the time step immediately after each exploration period. Diversity is measured by entropy, which is higher for action distributions that are closer to uniform and lower for distributions concentrated on a few actions. An action distribution is constructed for each game and epoch, and the entropies across games are averaged. The results are shown in Figure 9. The entropy of the action distribution for switching policies is distinctly greater than that of monolithic policies. Like most of the previous results, this quantity only plateaus after roughly 2M frames have elapsed.
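One way to compute this quantity is sketched below with hypothetical helper names. We assume that an exploration period is a maximal run of consecutive exploration steps (a single step for most $\epsilon$-greedy explorations) and that entropy is the Shannon entropy of the empirical action distribution, measured in bits.

```python
import math
from collections import Counter
from typing import Hashable, List, Sequence


def post_exploration_actions(
    actions: Sequence[Hashable], is_explore: Sequence[bool]
) -> List[Hashable]:
    """Actions taken on the first exploit step following each run of exploration steps."""
    return [
        action
        for t, action in enumerate(actions)
        if t > 0 and is_explore[t - 1] and not is_explore[t]
    ]


def shannon_entropy(samples: Sequence[Hashable]) -> float:
    """Shannon entropy (in bits) of the empirical distribution of `samples`."""
    counts = Counter(samples)
    n = sum(counts.values())
    if n == 0:
        return 0.0
    return -sum((c / n) * math.log2(c / n) for c in counts.values())
```

Pooling `post_exploration_actions` over all evaluation episodes of a game and epoch and passing the result to `shannon_entropy` gives one value per game, which can then be averaged across games.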

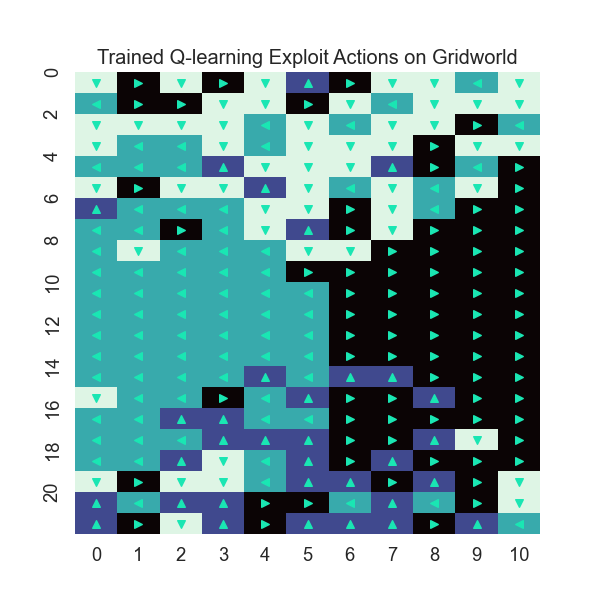

To illustrate this idea, we create a gridworld environment that provides the agent a reward of -1 for each time step that the agent is still on the grid; the agent’s goal is to leave the grid as quickly as possible. The agent begins in the center of the grid and learns through discrete Q-learning. Distinct actions have separate colors in Figure 10, with arrows showing the exploit action. The agent learns that it is fastest to exit the grid by going left or right. Notably, the actions near the top and bottom of the grid are seemingly random, as the agent has not seen and learned from those states as frequently as the others. Switching policies are more likely to reach the top and bottom areas of the gridworld state space and consequently would be more likely to have a higher entropy of the action distribution after exploration.

The difference in the entropy of the action distributions suggests that more diverse areas of the state space may be encountered after exploration modes with switching policies. This phenomenon is closely tied to the notion of detachment

Top Exploitation Proportions

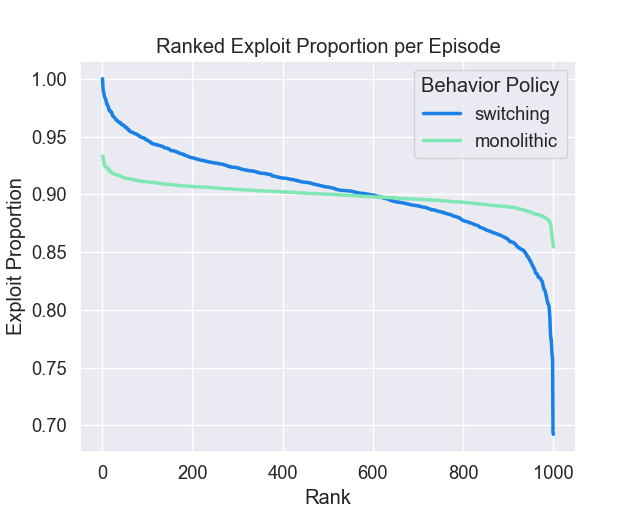

Our final investigation involves the change in exploitation proportion under switching policies. Since the probability of switching to explore mode is very low, there may be some episodes where the switch seldom happens if at all. This creates a distribution of exploitation action proportions per episode that is more extreme than that of monolithic policies, yet it is still not as extreme as using a single mode throughout the entire episode. Investigations of methods having similar interpolative characteristics have been conducted recently; for example, an action noise called pink noise

We perform an experiment to compare the return of the episodes with highest exploitation proportions between switching and monolithic policies. The returns of the top 10 of 100 episodes ranked by exploitation proportion of each epoch and game were averaged. Then, a ratio between the averages of switching and monolithic policies was computed and averaged across games. The results are plotted in Figure 11. There does not appear to be a clear trend aside from the ratio hovering mostly above 1.00, indicating that the top exploitation episodes of switching policies accrue more return than those of monolithic policies.
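The ratio for a single game and epoch can be sketched as follows. The function names are hypothetical, ties in exploitation proportion are broken arbitrarily by the sort, and the ratio is undefined whenever the monolithic average is zero.

```python
from typing import Sequence


def top_exploit_mean_return(
    returns: Sequence[float], exploit_props: Sequence[float], top_k: int = 10
) -> float:
    """Mean return of the top_k episodes ranked by exploitation proportion."""
    ranked = sorted(zip(exploit_props, returns), key=lambda pair: pair[0], reverse=True)
    top_returns = [episode_return for _, episode_return in ranked[:top_k]]
    return sum(top_returns) / len(top_returns)


def switching_to_monolithic_ratio(
    switch_returns: Sequence[float],
    switch_props: Sequence[float],
    mono_returns: Sequence[float],
    mono_props: Sequence[float],
    top_k: int = 10,
) -> float:
    """Ratio of top-exploitation mean returns (switching over monolithic)."""
    switching = top_exploit_mean_return(switch_returns, switch_props, top_k)
    monolithic = top_exploit_mean_return(mono_returns, mono_props, top_k)
    return switching / monolithic
```

A ratio above 1.00 means that the most exploitative switching episodes accrued more return than the most exploitative monolithic episodes for that game and epoch.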

The results are best illustrated by plotting the switching and monolithic exploitation proportions for 1K episodes (10 games of the last epoch), as shown in Figure 12. The top 100 switching episodes with the highest exploitation proportion take more exploitation actions than any monolithic episode. Therefore, the corresponding distribution is indeed more extreme.

While the previous discussion has illustrated that some switching episodes exploit more and generate more return, it does not specifically explain why training with mode-switching is superior; in particular, the slightly greater return is not necessary for learning an optimal policy as long as a similar state distribution is reached during training. One possibility is that mode-switching policies train on a more diverse set of behaviors and must generalize to that diversity. Reinforcement learning algorithms are notorious for overfitting

3. Conclusion

This blog post highlighted five observational differences between mode-switching and monolithic behavior policies on Atari and other illustrative tasks. The analysis showcased the flexibility of mode-switching policies, such as the ability to explore earlier in episodes and exploit at a notably higher rate. As the original study of mode-switching behavior by DeepMind was primarily concerned with performance, the experiments in this blog post supplement the study by providing a better understanding of the strengths and weaknesses of mode-switching exploration. Due to the vast challenges in RL, we envision that mode-switching policies will need to be tailored to specific environments to achieve the greatest performance gains over monolithic policies. Pending a wealth of future studies, we believe that mode-switching has the potential to become the default behavior policy used by researchers and practitioners alike.

Acknowledgements

We thank Nathan Bittner for a few helpful discussions on the topic of mode-switching exploration. We also thank Theresa Schlangen (Theresa Anderson at the time of publication) for helping polish some of the figures.