Masked Language Model with ALiBi and CLAP head

As a new approach to positional encoding, Attention with Linear Biases (ALiBi) uses linear biases of the attention weights to encode positional information, with the capability of context length extrapolation. In their paper, however, Press et al. focus on the perplexity of autoregressive decoder-only language models, leaving open the question of downstream tasks and ALiBi's applicability to encoder attention. In this blog post, we attempt to bridge the gap by testing masked language models (MLMs) with encoder-attention ALiBi and a prediction head similar to its counterpart in the original ALiBi models. We find that while a simplified prediction head may be beneficial, the performance of MLMs with encoder-attention ALiBi starts to deteriorate at 2048 sequence length at larger scales. We put our results in the context of related recent experiments and tentatively identify the circumstances that are more challenging for positional encoding designs. Finally, we open-source our MLMs, with BERT-level performance and 2048 context length.

Adapted and expanded from EIFY/fairseq.

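Unmodified and unmasked, the attention mechanism is permutation-invariant, so transformer-based language models employ positional encoding to break the symmetry and enable sequence modeling. In their ICLR 2022 paper, Press et al. introduced Attention with Linear Biases (ALiBi), which encodes token positions by adding to the attention weights a bias proportional to the distance between tokens: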

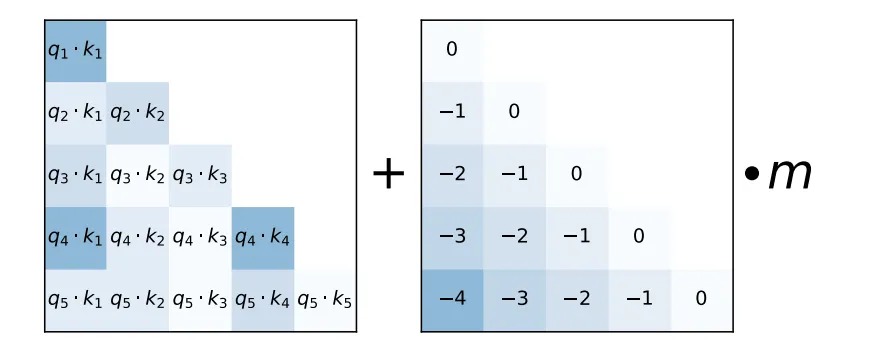

where \(m\) is a head-specific slope chosen to follow the geometric sequence \(\frac{1}{2^{0.5}}, \frac{1}{2^1}, \frac{1}{2^{1.5}}, \dots, \frac{1}{2^\frac{n}{2}}\) for a model with \(n\) attention heads. This approach is shown to enable input length extrapolation, in the sense that the perplexity of the model remains stable as the inference context length exceeds the training context length. The paper, however, focuses on autoregressive decoder-only models and relies on model perplexity as the metric, and therefore leaves open the question of whether ALiBi is applicable to MLMs like BERT.
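For readers without the figure, the causal (decoder-attention) form from Press et al. can be restated as follows. The symbols \(\mathbf{q}_i\) (the \(i\)-th query) and \(\mathbf{K}\) (the first \(i\) keys) are introduced here only for this restatement and are not used elsewhere in this post:

\[
\mathrm{softmax}\left(\mathbf{q}_i \mathbf{K}^\top + m \cdot [-(i-1), \dots, -2, -1, 0]\right)
\]

The bias vector is zero for the current token and decreases by one slope \(m\) for each step further into the past.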

Attention with Linear Biases (ALiBi)

Since MLMs are based on encoders that attend to tokens both before and after the given position, we must consider how to distinguish the two directions. Press himself suggested the following three options for encoder-attention ALiBi:

- Symmetric: Keep attention weight bias proportional to the distance between tokens and rely on the context to distinguish between tokens at positions +N and -N.

- Nonsymmetric, one-sided: Make half of the heads only attend to the tokens before and half of the heads only attend to the tokens after. Weight bias is still proportional to the distance.

- Nonsymmetric with different slopes: Make the slopes \(m\) different forward and backward, with either learned or fixed values.

With the observation that option 2 spends about half of the attention compute on no-op and option 3 can still result in bias value collision (e.g. \(m_{bwd} = 2 m_{fwd}\) and -1 vs. +2 positions), we implemented both option 1 and what we call “nonsymmetric with offset”: Shift the linear biases ahead by 0.5 * slope, i.e. the constant bias (right matrix of the figure above) becomes

\[
\begin{pmatrix}
0 & -0.5 & -1.5 & -2.5 & -3.5 \\
-1 & 0 & -0.5 & -1.5 & -2.5 \\
-2 & -1 & 0 & -0.5 & -1.5 \\
-3 & -2 & -1 & 0 & -0.5 \\
-4 & -3 & -2 & -1 & 0
\end{pmatrix}
\]

Unless otherwise noted, ALiBi for the following experiments means this nonsymmetric-with-offset encoder-attention ALiBi.
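The offset bias matrix above can be generated programmatically. The following is a minimal, dependency-free sketch rather than the code used in EIFY/fairseq: the function names `offset_alibi_bias` and `head_slopes` are illustrative assumptions, and the slopes simply follow the geometric sequence quoted earlier in this post.

```python
def offset_alibi_bias(seq_len, offset=0.5):
    """Constant (pre-slope) bias for nonsymmetric-with-offset ALiBi.

    Entry [i][j] is the bias for query position i attending to key position j:
    -(i - j) for keys at or before the query, and -(j - i) + offset for keys
    after it, so the two directions never share a value.
    """
    bias = []
    for i in range(seq_len):              # query position
        row = []
        for j in range(seq_len):          # key position
            if j <= i:
                row.append(float(j - i))              # 0, -1, -2, ...
            else:
                row.append(float(i - j) + offset)     # -0.5, -1.5, ...
        bias.append(row)
    return bias


def head_slopes(n_heads):
    """Slopes 2^-0.5, 2^-1, ..., 2^-(n/2), as stated in the text (assumption)."""
    return [2.0 ** (-0.5 * k) for k in range(1, n_heads + 1)]


def scaled_bias(bias, slope):
    """Per-head bias: the constant matrix multiplied by that head's slope."""
    return [[slope * value for value in row] for row in bias]
```

Calling `offset_alibi_bias(5)` reproduces the 5 x 5 matrix shown above. Within each row, keys before the query get integer biases and keys after it get half-integer biases, which is why no two positions collide.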

Contrastive Language Pretraining (CLAP) Head

The prediction head is one part of LMs that has received less attention and that happens to differ between the ALiBi autoregressive decoder-only models and RoBERTa. Based on the configs and training logs, the ALiBi models use the adaptive word embedding and softmax of Baevski & Auli. Inspired by CLIP, we tested what we call the Contrastive Language Pretraining (CLAP) head:

    import torch
    import torch.nn as nn
    import torch.nn.functional as F


    class ClapHead(nn.Module):
        """Head for masked language modeling."""

        def __init__(self, initial_beta, weight):
            super().__init__()
            # Trainable scale ("thermodynamic beta") applied to the logits
            self.beta = nn.Parameter(torch.tensor(initial_beta))
            # Token embedding matrix, reused as the output projection
            self.weight = weight

        def forward(self, features, masked_tokens=None, normalize=True):
            # Only project the masked tokens while training,
            # saves both memory and computation
            if masked_tokens is not None:
                features = features[masked_tokens, :]
            w = self.weight
            if normalize:
                # L2-normalize each token embedding (row of the weight matrix)
                w = F.normalize(w, dim=-1)
            return self.beta * F.linear(features, w)

Compare this with the baseline RoBERTa prediction head:

    import torch
    import torch.nn as nn
    import torch.nn.functional as F
    from fairseq import utils
    from fairseq.modules import LayerNorm


    class RobertaLMHead(nn.Module):
        """Head for masked language modeling."""

        def __init__(self, embed_dim, output_dim, activation_fn, weight=None):
            super().__init__()
            self.dense = nn.Linear(embed_dim, embed_dim)
            self.activation_fn = utils.get_activation_fn(activation_fn)
            self.layer_norm = LayerNorm(embed_dim)

            if weight is None:
                weight = nn.Linear(embed_dim, output_dim, bias=False).weight
            self.weight = weight
            self.bias = nn.Parameter(torch.zeros(output_dim))

        def forward(self, features, masked_tokens=None, **kwargs):
            # Only project the masked tokens while training,
            # saves both memory and computation
            if masked_tokens is not None:
                features = features[masked_tokens, :]

            x = self.dense(features)
            x = self.activation_fn(x)
            x = self.layer_norm(x)
            # project back to size of vocabulary with bias
            x = F.linear(x, self.weight) + self.bias
            return x

We removed the embed_dim x embed_dim fully-connected layer, the activation function (GELU), the layer norm, and the output_dim trainable bias. Just like CLIP, we added a trainable thermodynamic beta and L2-normalized the token embeddings, both before feeding them to the transformer and before computing their inner products with the transformer output. These inner products, scaled by beta, serve as the softmax logits.
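To make the logit arithmetic concrete, here is a dependency-free sketch of the same computation on a toy vocabulary. It is an illustration under stated assumptions, not the fork's implementation: the names `l2_normalize`, `clap_logits`, and `softmax`, and all the numbers, are made up for this example.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit L2 norm (left unchanged if it is all zeros)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def clap_logits(feature, embeddings, beta, normalize=True):
    """beta * <feature, embedding> for every token embedding in the vocabulary."""
    rows = [l2_normalize(e) for e in embeddings] if normalize else embeddings
    return [beta * sum(f * w for f, w in zip(feature, row)) for row in rows]


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    top = max(logits)
    exps = [math.exp(x - top) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Toy vocabulary of three tokens with 2-dimensional embeddings (made-up values).
embeddings = [[3.0, 4.0], [0.0, 2.0], [-1.0, 0.0]]
# Stand-in for the transformer output at one masked position (made-up values).
feature = [0.6, 0.8]

logits = clap_logits(feature, embeddings, beta=10.0)  # approximately [10, 8, -6]
probs = softmax(logits)
```

Because the embeddings are normalized, each logit depends only on the direction of the token embedding, and beta controls how sharply the softmax separates the candidates.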

Experiments

WikiText-103

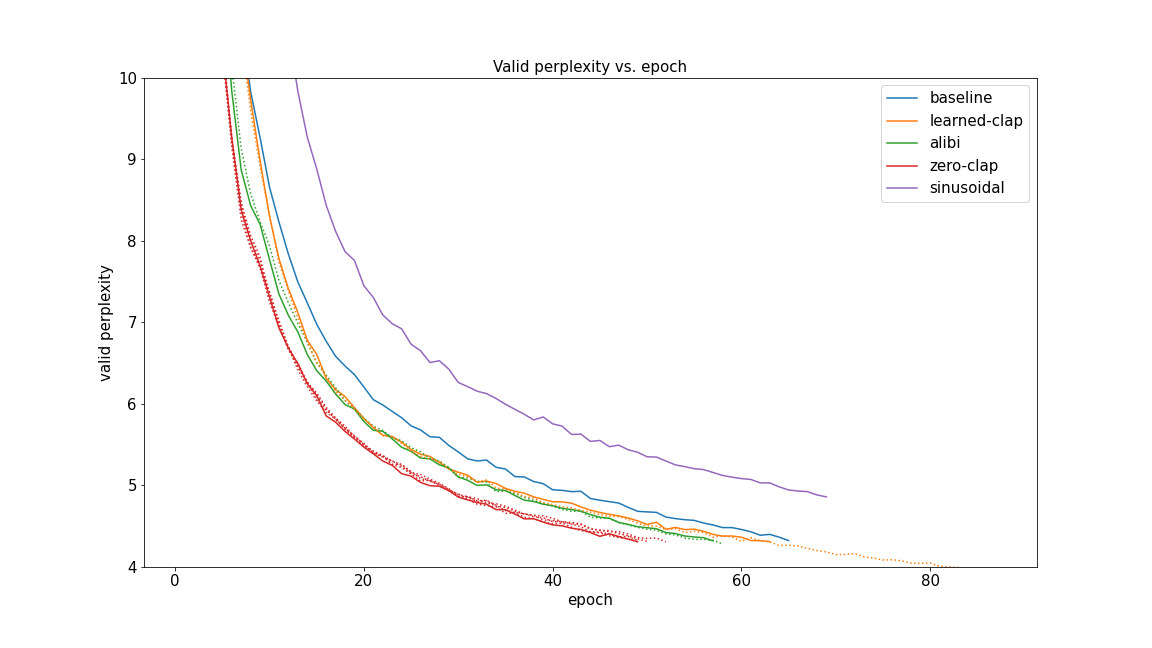

We first tested the changes on the WikiText-103 dataset. In the figure above, solid lines are what’s considered the “canonical” setup and dotted lines are experiments with the following variations in setup. These variations turned out to be irrelevant:

- Whether we use attention dropout or not

- Whether we use symmetric ALiBi (option 1) or nonsymmetric-with-offset ALiBi above

- Whether we use a zero vector or a separate learnable embedding for the mask embedding. (The intention was to test using a zero vector instead of a separate learnable embedding for the mask embedding, which in combination with ALiBi results in no non-semantic information in the input embeddings. However, a bug prevented this variation from working correctly and the end effect was merely deleting the last two words (madeupword0001 and madeupword0002) from the dictionary instead, which we don't expect to be consequential.)

- Whether we L2-normalize the embeddings for the CLAP head or not

- Whether we scale the L2-normalized embeddings by sqrt(embed_dim) (no_scale_embedding=False) or not

As we can see, the dotted lines are almost on top of the solid lines. Notably, sinusoidal positional encoding underperforms significantly compared to learned positional encoding.

The Pile

As the next step, we scaled our experiments to train on the Pile, testing both roberta.base with 125M parameters and roberta.large with 355M parameters. These experiments were performed on 8 x A100 40GB SXM4 GPUs, where the roberta.base experiments took ~3 days and the roberta.large experiments took ~9 days. In the tables below, PPL is the final validation MLM perplexity, STS-B is the best validation loss, and all the others are the best validation accuracies over 10 epochs of finetuning.

roberta.base

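| | PPL↓ | CoLA | MNLI | MRPC | QNLI | QQP | RTE | SST-2 | STS-B↓ |
|---|---|---|---|---|---|---|---|---|---|
| baseline | 2.94 | 83.6 | 84.2 | 90 | 91.6 | 91.3 | 73.6 | 92.1 | 0.028 |
| learned-clap | 2.86 | 81.7 | 84.4 | 86.3 | 90.9 | 91.2 | 72.6 | 92.5 | 0.027 |
| alibi | 2.93 | 69.2 | 85.1 | 80.9 | 92 | 91.5 | 63.9 | 93.1 | 0.033 |
| zero-clap | 2.83 | 70.5 | 84.9 | 75.5 | 90.6 | 91.1 | 54.9 | 89.7 | 0.041 |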

*Baseline but with sinusoidal positional encoding instead of learned positional encoding failed to converge.

roberta.large

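| | PPL↓ | CoLA | MNLI | MRPC | QNLI | QQP | RTE | SST-2 | STS-B↓ |
|---|---|---|---|---|---|---|---|---|---|
| baseline* | 2.55 | 83.7 | 86.8 | 84.3 | 92.5 | 91.8 | 79.8 | 93.3 | 0.027 |
| learned-clap | 2.5 | 84.1 | 86.3 | 89.7 | 92.8 | 91.7 | 79.8 | 93.7 | 0.023 |
| alibi | 2.65 | 69.1 | 86.5 | 68.4 | 92.4 | 91.7 | 52.7 | 93.6 | 0.123 |
| zero-clap | 2.54 | 69.1 | 86.7 | 81.9 | 92.2 | 91.6 | 52.7 | 93.1 | 0.031 |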

*Loss spiked somewhere between 24000-24500 updates and the model failed to recover. Loosely following the practice of 5.1 Training Instability in the PaLM paper 1 to 2.

We found that ALiBi no longer helps lower the validation MLM perplexity. Furthermore, ALiBi turned out to be harmful for several specific GLUE tasks (CoLA, MRPC, and RTE). The CLAP head on its own, however, seems to be competitive and in fact outperforms the baseline with roberta.large.

Conclusions

This seems to be another case where models with lower perplexity do not necessarily yield higher accuracies on downstream tasks, and where architectural changes that are beneficial at smaller scales do not imply the same at larger scales.

In the broader context, MosaicBERT [CLS] token. Lee et al. evaluate LittleBird models on a collection of QA benchmarks for both English and Korean and report favorable performance, but leave open the question of whether the models work well for other NLP tasks. Notably, we also found our ALiBi models capable of matching the baseline performance on the question answering task QNLI, so the reported performance is compatible with our experiments even without attributing it to the other differences in architecture or pretraining task.

Finally, what can we say about the original decoder-attention ALiBi and positional encodings in general? The original decoder-attention ALiBi has been shown to help not only perplexity, but also performance on evaluation suites consisting of a diverse set of tasks, like the EleutherAI Language Model Evaluation Harness. We tentatively suggest that:

- Decoder-attention positional encodings really should be considered causal mask + additional encodings and how they complement each other should be taken into account.

- Longer context length and certain downstream tasks are more challenging for positional encodings. One worthwhile direction may be to rank their difficulties systematically and iterate on the more challenging circumstances first for future positional encoding designs.

Model checkpoints

Final checkpoints for models trained on the Pile:

roberta.base

baseline learned-clap alibi zero-clap

roberta.large

baseline learned-clap alibi zero-clap

To load them, install EIFY/fairseq following the original instructions and download the GPT-2 fairseq dictionary:

wget -O gpt2_bpe/dict.txt https://dl.fbaipublicfiles.com/fairseq/gpt2_bpe/dict.txt

Then all of the checkpoints above except the zero-clap ones can load as follows:

    $ python
    Python 3.8.10 (default, Jun 22 2022, 20:18:18)
    [GCC 9.4.0] on linux
    Type "help", "copyright", "credits" or "license" for more information.
    >>> from fairseq.models.roberta import RobertaModel
    >>> roberta = RobertaModel.from_pretrained('/checkpoint-dir', 'learned-clap-large.pt', '/dict-dir')
    (...)
    >>> roberta.fill_mask('The capital of China is <mask>.', topk=3)
    [('The capital of China is Beijing.', 0.7009016871452332, ' Beijing'), ('The capital of China is Shanghai.', 0.23566904664039612, ' Shanghai'), ('The capital of China is Moscow.', 0.010170688852667809, ' Moscow')]
    >>>

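The zero-clap ones were trained without the last two madeupword’s, so delete those two entries from dict.txt before loading, i.e. (in diff notation, where lines starting with `-` are removed):

    (...)
     50009 0
     50256 0
     madeupword0000 0
    -madeupword0001 0
    -madeupword0002 0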

    $ python
    Python 3.8.10 (default, Jun 22 2022, 20:18:18)
    [GCC 9.4.0] on linux
    Type "help", "copyright", "credits" or "license" for more information.
    >>> from fairseq.models.roberta import RobertaModel
    >>> roberta = RobertaModel.from_pretrained('/checkpoint-dir', 'zero-clap-large.pt', '/dict-dir')
    (...)
    >>> roberta.fill_mask('The capital of China is <mask>.', topk=3)
    [('The capital of China is Beijing.', 0.7051425576210022, ' Beijing'), ('The capital of China is Shanghai.', 0.21408841013908386, ' Shanghai'), ('The capital of China is Taiwan.', 0.007823833264410496, ' Taiwan')]
    >>>
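The dictionary edit needed for the zero-clap checkpoints can also be scripted. The helper below is a hypothetical convenience function, not part of fairseq or the fork. It assumes the usual one-entry-per-line `<symbol> <count>` layout of dict.txt and rewrites the file in place, so keep a copy of the original for the other checkpoints.

```python
from pathlib import Path


def drop_dict_entries(dict_path, names=("madeupword0001", "madeupword0002")):
    """Remove the named symbols from a dict.txt file and return how many were dropped.

    Hypothetical helper: assumes each line is "<symbol> <count>".
    """
    path = Path(dict_path)
    lines = path.read_text(encoding="utf-8").splitlines()
    # Keep every line whose first field is not one of the symbols to remove.
    kept = [line for line in lines if line.split(" ")[0] not in names]
    path.write_text("\n".join(kept) + "\n", encoding="utf-8")
    return len(lines) - len(kept)
```

For example, `drop_dict_entries('/dict-dir/dict.txt')` should return 2 on an unmodified GPT-2 fairseq dictionary and 0 if the entries were already removed.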

The rest of the original example usage should also just work. While these checkpoints have only been tested with this fork, the baseline ones should also work with the original fairseq repo with minimal changes to the state dict:

    >>> import torch
    >>> path = '/checkpoint-dir/baseline-large.pt'
    >>> with open(path, 'rb') as f:
    ...     state = torch.load(f, map_location=torch.device("cpu"))
    ...
    >>>
    >>> del state['cfg']['task']['omit_mask']
    (...)
    >>> torch.save(state, '/checkpoint-dir/compatible.pt')